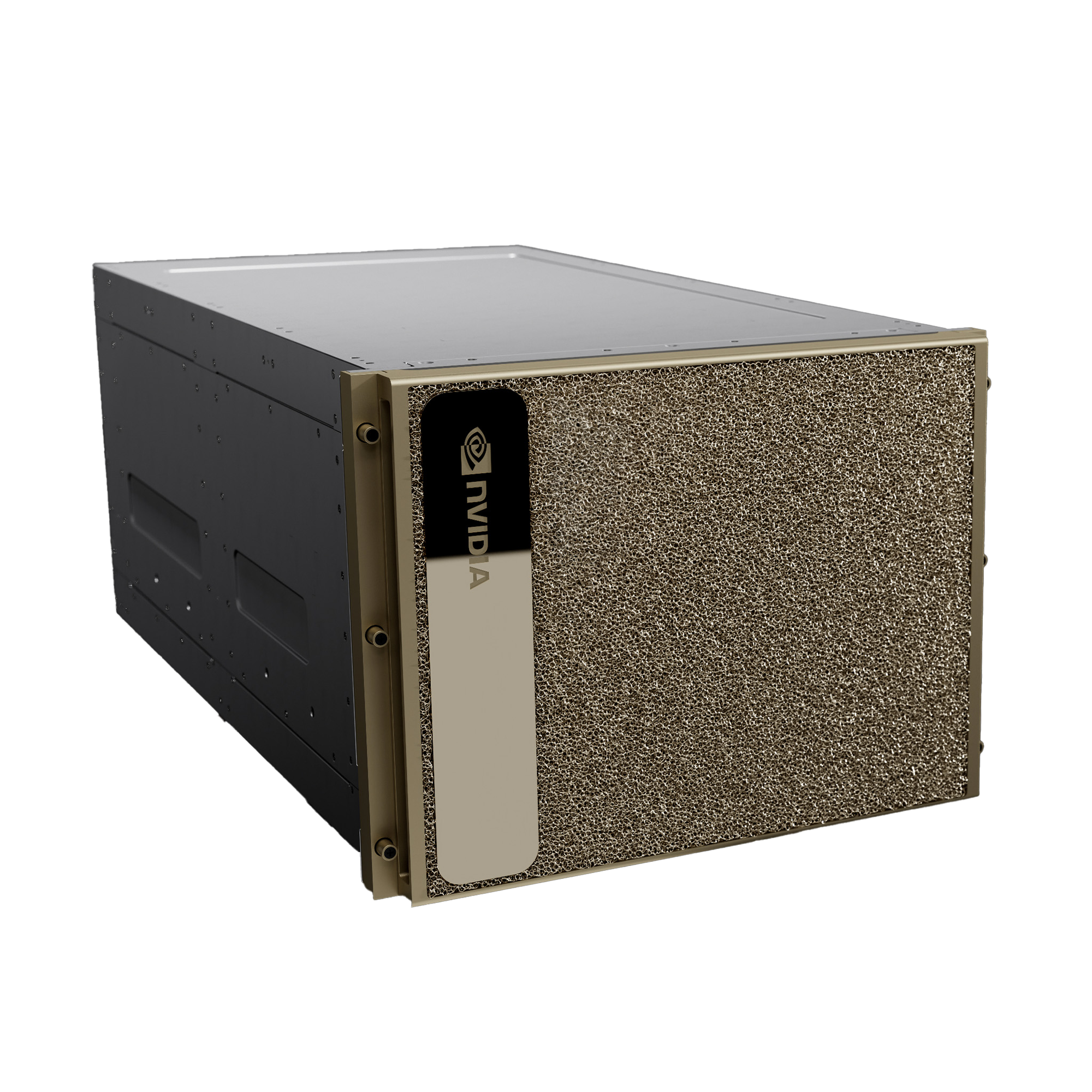

The Gold Standard for AI Computing

NVIDIA DGX H100, part of the DGX platform, is built for AI-driven business solutions. Powered by the NVIDIA H100 Tensor Core GPU, it is designed to maximize AI throughput for workloads such as natural language processing, recommender systems, and data analytics. DGX H100 is available on-premises and through a range of other access and deployment options, so businesses can apply AI to their most significant challenges.

NVIDIA DGX H100: The Epicenter of AI Innovation

Positioned as the heart of your enterprise's AI center of excellence, DGX H100 marks the fourth generation of the world's premier purpose-built AI infrastructure. It’s a fully optimized hardware and software platform, supporting a wide range of NVIDIA AI software solutions, a robust third-party ecosystem, and expert advice from NVIDIA professional services. Renowned for its reliability, DGX H100 is utilized by thousands of clients globally across numerous industries.

Breaking AI Scale Barriers

- High Performance: DGX H100, featuring the NVIDIA H100 Tensor Core GPU, redefines AI scalability and performance.

- Advanced Networking: Delivers 9X more performance, plus 2X faster networking with NVIDIA ConnectX®-7 SmartNICs.

- Ideal for Demanding AI Tasks: Perfect for generative AI and deep learning recommendation models.

Specifications

| Specification | Details |
|---|---|
| GPU | 8x NVIDIA H100 Tensor Core GPUs |
| GPU Memory | 640GB total |
| Performance | 32 petaFLOPS FP8 |
| NVIDIA® NVSwitch™ | 4x |
| System Power Usage | 10.2kW max |
| CPU | Dual Intel® Xeon® Platinum 8480C Processors (112 cores total, 2.00 GHz base, 3.80 GHz max boost) |
| System Memory | 2TB |
| Networking | 4x OSFP ports serving 8x single-port NVIDIA ConnectX-7 VPI (up to 400Gb/s InfiniBand/Ethernet); 2x dual-port QSFP112 NVIDIA ConnectX-7 VPI (up to 400Gb/s InfiniBand/Ethernet) |
| Management Network | 10Gb/s onboard NIC with RJ45; 100Gb/s Ethernet NIC; host baseboard management controller (BMC) with RJ45 |
| Storage | OS: 2x 1.92TB NVMe M.2; internal storage: 8x 3.84TB NVMe U.2 |
| Software | NVIDIA AI Enterprise (optimized AI software); NVIDIA Base Command (orchestration, scheduling, and cluster management); DGX OS / Ubuntu / Red Hat Enterprise Linux / Rocky (operating system) |
| Support | 3-year business-standard hardware and software support |
| System Weight | 287.6lbs (130.45kgs) |
| Packaged System Weight | 376lbs (170.45kgs) |
| System Dimensions | Height: 14.0in (356mm), Width: 19.0in (482.2mm), Length: 35.3in (897.1mm) |
| Operating Temperature Range | 5–30°C (41–86°F) |
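
The headline figures in the table can be broken down per component with simple arithmetic. The sketch below is illustrative only: it copies values from the table, and the dictionary keys, function names, and the 40 kW power budget are hypothetical choices made for this example, not NVIDIA tooling or sizing guidance.

```python
# Values copied from the specifications table; the key names are
# hypothetical labels chosen for this example.
SPEC = {
    "gpu_count": 8,
    "gpu_memory_total_gb": 640,
    "fp8_petaflops": 32,
    "cpu_count": 2,
    "cpu_cores_total": 112,
    "data_drive_count": 8,
    "data_drive_tb": 3.84,
    "max_power_kw": 10.2,
}


def per_unit_figures(spec):
    """Derive per-component figures from the system-level totals."""
    return {
        "gpu_memory_per_gpu_gb": spec["gpu_memory_total_gb"] / spec["gpu_count"],
        "fp8_petaflops_per_gpu": spec["fp8_petaflops"] / spec["gpu_count"],
        "cores_per_cpu": spec["cpu_cores_total"] // spec["cpu_count"],
        "internal_storage_tb": round(
            spec["data_drive_count"] * spec["data_drive_tb"], 2
        ),
    }


def systems_within_power_budget(budget_kw, spec=SPEC):
    """Count whole systems that fit in a power budget at maximum draw.

    Assumption: only the system's own maximum power is counted; cooling,
    switches, and safety headroom are deliberately ignored.
    """
    return int(budget_kw // spec["max_power_kw"])


if __name__ == "__main__":
    for name, value in per_unit_figures(SPEC).items():
        print(f"{name}: {value}")
    # 40 kW is a hypothetical budget used only to exercise the function.
    print("systems in 40 kW:", systems_within_power_budget(40))
```

Because the estimate uses the 10.2kW maximum draw and leaves out cooling, network equipment, and headroom, it is best read as an upper bound on system count rather than a facility plan.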

Empowered by NVIDIA Base Command

NVIDIA Base Command powers the DGX platform, giving enterprises access to NVIDIA's software for orchestration, scheduling, and cluster management. DGX infrastructure also includes NVIDIA AI Enterprise, a suite of software optimized to streamline AI development and deployment.

Flexible, Leadership-Class Infrastructure

DGX H100 fits your organization's IT framework and practices: it can be installed on-premises or accessed through a variety of managed and colocation options. With DGX-Ready Lifecycle Management, enterprises can keep their deployments current as the technology advances. DGX H100 integrates into existing IT infrastructure without adding to the burden on IT teams, so organizations can put AI to work for business impact sooner.