The World’s Most Powerful GPU for AI and HPC

The NVIDIA H200 Tensor Core GPU supercharges generative AI and HPC workloads with enhanced performance and memory capabilities. As the first GPU with HBM3e, the H200’s larger and faster memory fuels the acceleration of generative AI and LLMs while advancing scientific computing for HPC workloads.

NVIDIA HGX™ H200 Solutions by AMAX

AceleMax X-88-CH200

8U HGX H200 System

| Component | Specification |
|---|---|
| CPU | 2x AMD EPYC™ 9004 Series Processors |
| GPU | 8x NVIDIA H200 SXM5 |
| Memory | Up to 6TB of 4800MHz DDR5 RAM across 24 DIMMs |
| Storage | 16x 2.5” hot-swappable NVMe drives + 2x SATA drives + 1x M.2 NVMe |
| Networking | Flexible configuration options that can be adapted to specific customer requirements |
| Cooling | Air Cooling |

AceleMax X-48-CH200

4U Liquid Cooled HGX H200

| Component | Specification |
|---|---|
| CPU | 4th/5th Gen Intel® Xeon® Scalable Processor |
| GPU | 4x NVIDIA HGX H200 SXM5 GPU |
| Memory | 32 DIMM slots supporting max 8TB DDR5-5600 |
| Storage | 6x 2.5” hot-swap NVMe/SATA/SAS drive bays |
| Networking | 2x 10G NIC |
| Cooling | 4-GPU H200 SXM5: Air Cool OR 8-GPU H200 SXM5: Liquid Cool |

NVIDIA H200 GPU

Built on the NVIDIA Hopper architecture, the NVIDIA H200 is the first GPU to offer 141 gigabytes (GB) of HBM3e memory, with memory bandwidth of up to 4.8 terabytes per second (TB/s). That is almost twice the capacity of the NVIDIA H100 Tensor Core GPU, with 1.4 times the memory bandwidth. The H200's larger, faster memory accelerates generative AI and large language models and advances scientific computing for HPC workloads, while improving energy efficiency and reducing overall costs.
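As a quick check on the figures above, the capacity and bandwidth ratios can be worked out directly. The sketch below uses the H200 numbers quoted on this page; the H100 SXM figures (80 GB, 3.35 TB/s) are an assumption taken from NVIDIA's public H100 specifications and do not appear on this page. The helper names are illustrative only.

```python
# Peak H200 figures as quoted in the text above.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBS = 4.8

# ASSUMPTION: H100 SXM figures from NVIDIA's public specifications,
# not stated on this page.
H100_MEMORY_GB = 80
H100_BANDWIDTH_TBS = 3.35


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


def aggregate_memory_gb(gpu_count: int, per_gpu_gb: float = H200_MEMORY_GB) -> float:
    """Total GPU memory across all GPUs in one system, in GB."""
    return gpu_count * per_gpu_gb


if __name__ == "__main__":
    print(f"Capacity ratio:  {ratio(H200_MEMORY_GB, H100_MEMORY_GB):.2f}x")
    print(f"Bandwidth ratio: {ratio(H200_BANDWIDTH_TBS, H100_BANDWIDTH_TBS):.2f}x")
    print(f"8-GPU system:    {aggregate_memory_gb(8):.0f} GB of HBM3e")
```

Under these assumptions the capacity ratio comes out at roughly 1.76 and the bandwidth ratio at roughly 1.43, consistent with "almost twice" and "1.4 times". An 8-GPU HGX H200 system would then carry 1,128 GB of HBM3e in total.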

| Llama2 70B Inference | GPT-3 175B Inference | HPC Performance |
|---|---|---|
| 1.9X | 1.6X | 110X |

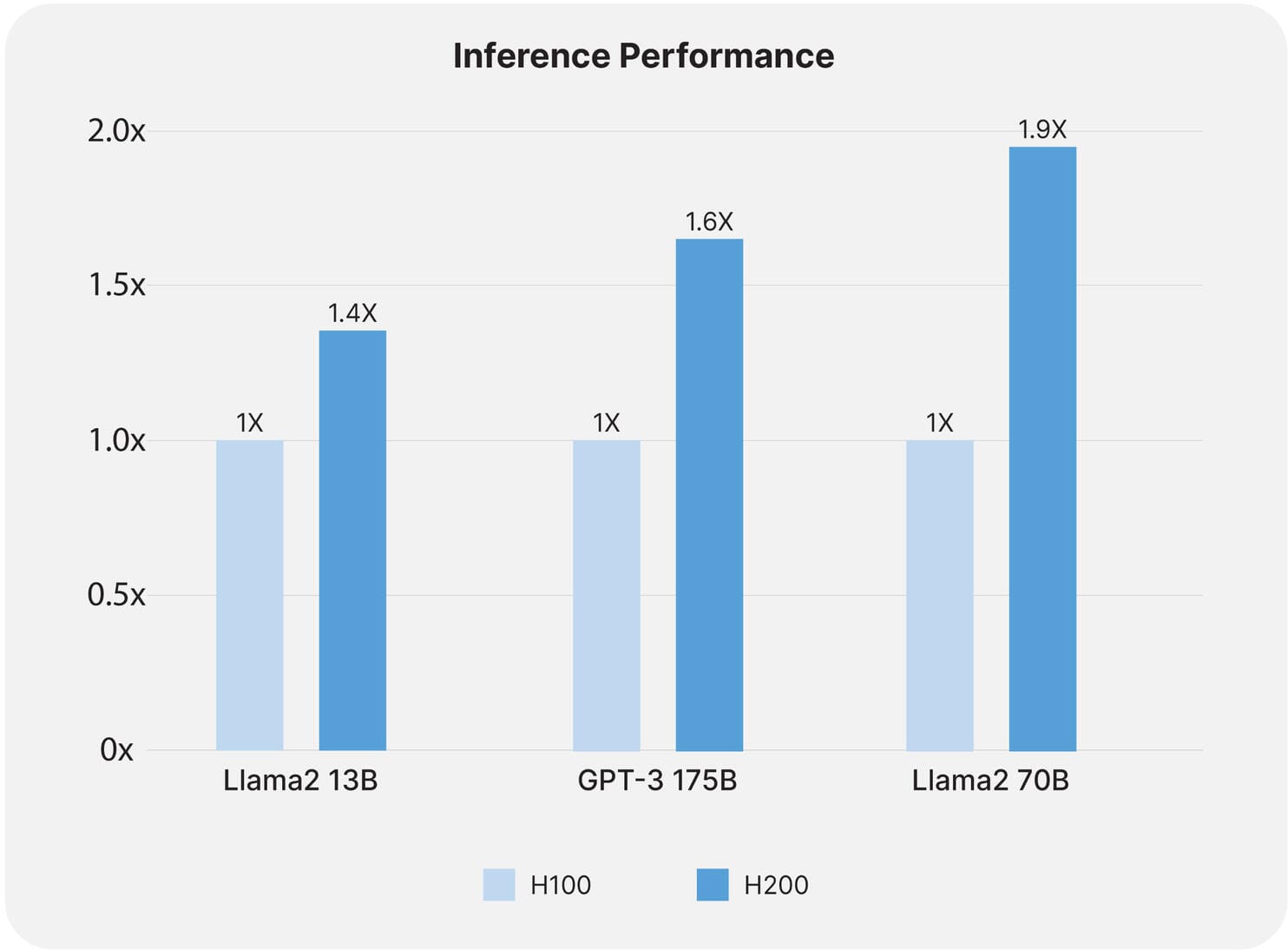

Upgraded LLM Inference Capabilities

The H200 significantly increases inference performance, delivering nearly double the speed of H100 GPUs on LLMs such as Llama2 70B.

The H200's Hopper architecture is designed to meet the demanding requirements of modern AI workloads, making it an ideal solution for enterprises looking to optimize their AI infrastructure for better performance and scalability.

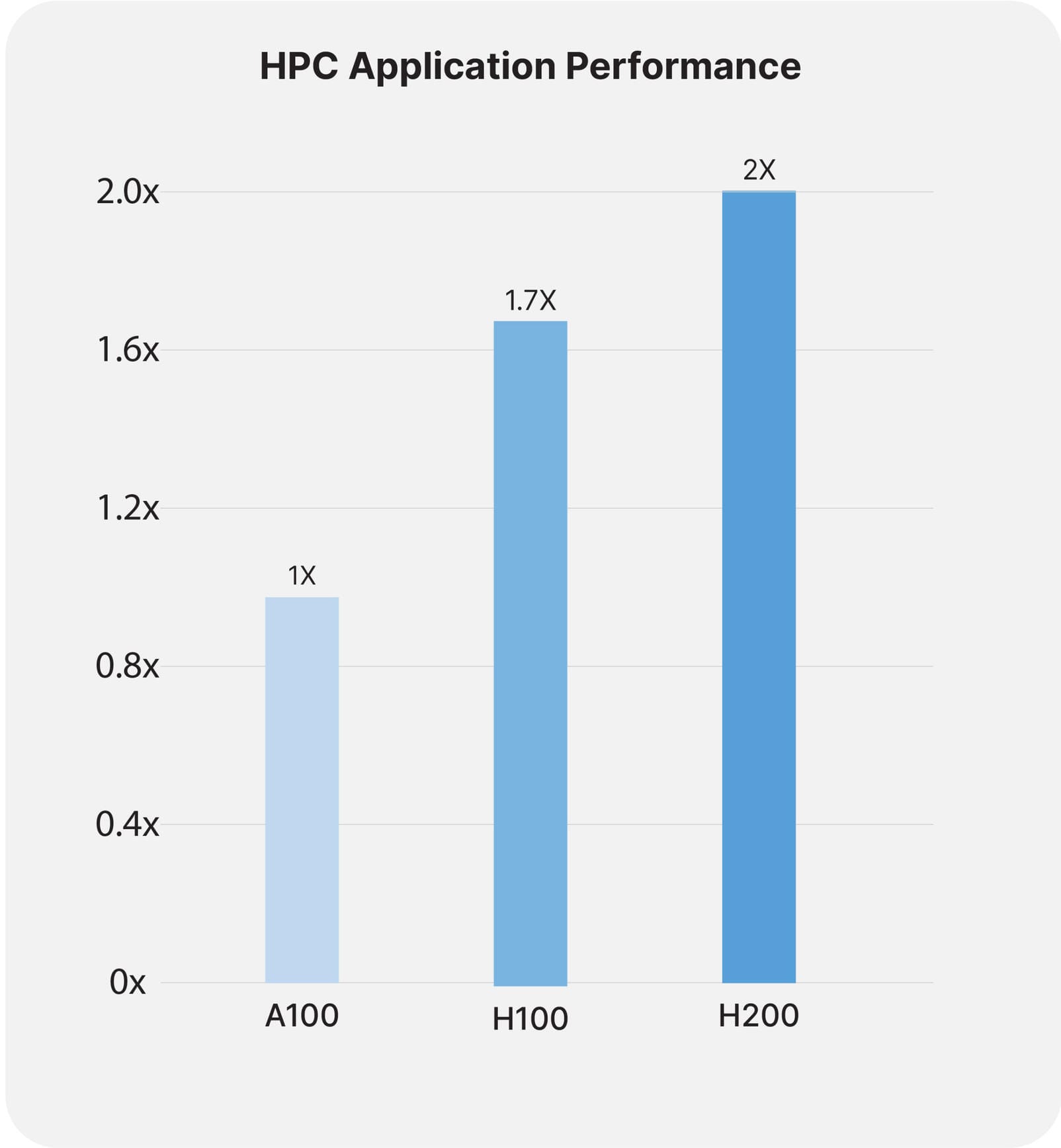

HPC Performance

Memory bandwidth is vital for HPC applications because it enables faster data movement and reduces bottlenecks in complex processing tasks. For memory-intensive HPC workloads such as simulations, scientific research, and artificial intelligence, the H200's higher memory bandwidth allows data to be accessed and processed efficiently, delivering results up to 110 times faster than CPUs.
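To illustrate why bandwidth matters for memory-bound work, the following minimal sketch estimates a lower bound on the time needed to read a working set once from GPU memory, using time = bytes / bandwidth. It assumes the 4.8 TB/s peak figure quoted on this page; the `efficiency` parameter and the function name are hypothetical, and real kernels typically sustain less than peak bandwidth.

```python
H200_PEAK_BANDWIDTH_TBS = 4.8  # peak memory bandwidth quoted on this page


def min_sweep_time_ms(
    working_set_gb: float,
    bandwidth_tbs: float = H200_PEAK_BANDWIDTH_TBS,
    efficiency: float = 1.0,
) -> float:
    """Lower bound, in milliseconds, on reading `working_set_gb` once.

    `efficiency` is a hypothetical knob for the fraction of peak
    bandwidth a kernel actually sustains (1.0 means full peak).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    total_bytes = working_set_gb * 1e9
    bytes_per_second = bandwidth_tbs * 1e12 * efficiency
    return total_bytes / bytes_per_second * 1e3


if __name__ == "__main__":
    # One pass over a 100 GB working set at peak bandwidth.
    print(f"{min_sweep_time_ms(100):.1f} ms")
    # The same pass if only half of peak bandwidth is sustained.
    print(f"{min_sweep_time_ms(100, efficiency=0.5):.1f} ms")
```

This is only a bandwidth-bound estimate: it ignores compute time, caching, and transfers between GPUs or to the host, so measured runtimes will be higher.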

AMAX as your NVIDIA H200 Solution Provider

At AMAX, we specialize in designing and building on-premises compute solutions that power and accelerate your AI and HPC workloads.