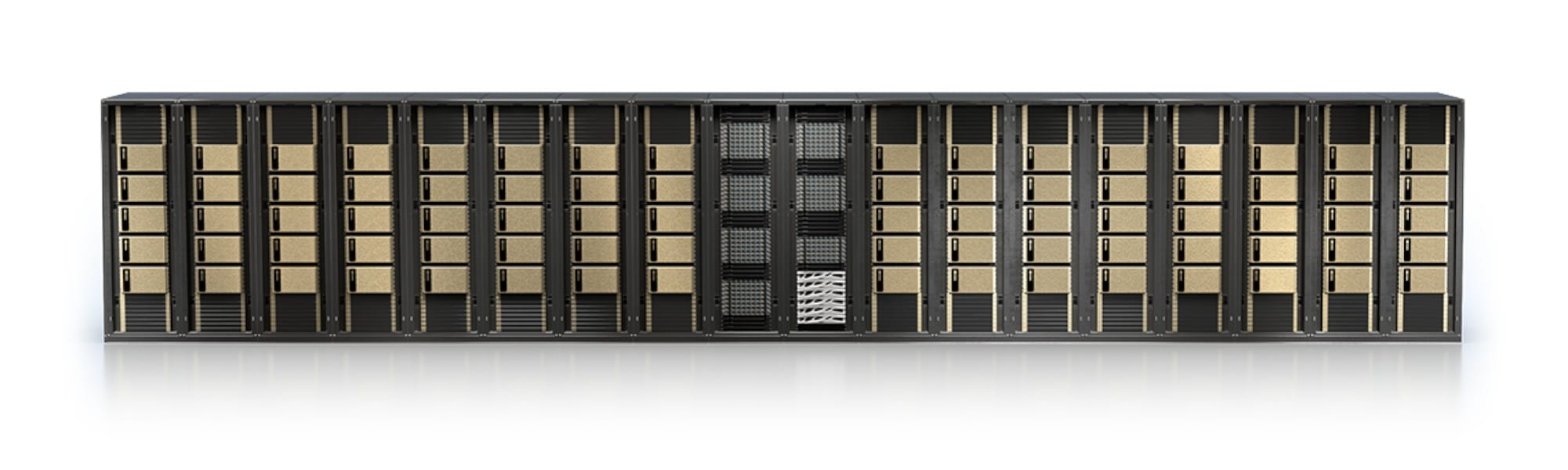

Build Your NVIDIA DGX BasePOD with AMAX

Engineer your BasePOD solution with AMAX and discover what is possible today for enterprise deployments in High Performance Computing and Artificial Intelligence, including use cases such as Large Language Models (LLMs) with Retrieval Augmented Generation (RAG).

NVIDIA DGX BasePOD and SuperPOD solutions, provided by AMAX, offer the streamlined infrastructure and software you need to accelerate AI deployment while eliminating design complexities and management challenges. Step into the future with AMAX’s tailored solutions for the NVIDIA DGX platform. AMAX adapts proven reference architectures to align with your compute requirements.

Through intelligent engineering design, AMAX builds total computing solutions for the needs of enterprises navigating the demands of accelerated computing.

Ready to Build a DGX Solution?

Contact our engineering team today to discuss your project.

Get In Touch

Precision Engineering

At AMAX, our team of experts is here to offer guidance on accelerated computing by transforming IT component building blocks into specialized solutions for AI, healthcare, semiconductor, automobile, telecommunications, and other sectors. We invent Gap Technologies to develop solutions that do not exist in the market today. Let us work with you to engineer your server infrastructure built on the NVIDIA DGX scalable system.

Scalable Custom and Reference GPU POD Designs for Every Workload

We initiate our process with a rigorous evaluation of your computational needs, tailored to the enterprise scale. Armed with this foundational understanding, we proceed to select hardware and software components, each optimized for maximal compatibility with NVIDIA DGX technology. The end result is a bespoke computing cluster, purpose-built to advance your enterprise’s AI and High Performance Computing capabilities.

Expert Guidance at Your Fingertips

We ensure a successful deployment that perfectly aligns with your objectives. Whether you’re navigating complex configurations or seeking optimizations for peak performance, our architects stand ready to support your journey, making your vision a reality.

Consult with our DGX solutions architects to bring your cluster to life.

Optimized for Success

We take care of every aspect, from cluster bring-up and troubleshooting to meticulous performance validation, configuration optimization, and rigorous benchmarking. With our expertise, you can trust in a flawless operation that maintains peak performance throughout your cluster’s lifecycle.

Total Visibility

We facilitate remote access for convenient acceptance testing and validation. This feature empowers you to oversee and verify your cluster’s performance and functionality from anywhere, ensuring a seamless and efficient deployment process.

Hassle-free Installation

Our team of H100 BasePOD/SuperPOD solutions architects delivers and sets up your cluster on-site with care and expertise. We make sure every detail is taken care of, ensuring your cluster is ready to perform.

Continuous Support

Our post-sales engineering services and cluster lifecycle management offer 24/7 support. We are dedicated to ensuring maximum uptime for your deployment, addressing any evolving needs, and continually optimizing your cluster’s performance, so you can focus on your core objectives with peace of mind.