AMAX Engineering transforms NVIDIA reference designs into turnkey AI Factories through comprehensive system validation and customer enablement.

Faster time-to-value. Our expertise in AI networking fabrics, storage, rack-level power distribution and liquid-cooling ensures production-ready infrastructure from day one. We deliver a cloud-native experience on-premise. Pre-configured Kubernetes clusters, NVIDIA operators, and automation for provisioning, monitoring, and lifecycle management eliminate months of deployment friction. Your teams can run AI workloads using the same patterns they already know —no proprietary lock-in, no learning curve.

Built to scale. Whether deploying your first cluster or expanding existing operations, AMAX enables predictable performance and operational consistency. Configuration control, workload orchestration, and validated reference architectures allow enterprises to scale with confidence while reducing total operational costs.

The result: production-ready AI infrastructure that integrates seamlessly with your existing toolchain, delivered with the support and expertise to ensure your GPU investment drives immediate business value.

Designed for Repeatability and Growth

Standard configurations reduce integration risk and configuration drift, while preserving performance and operational characteristics as environments expand. Capacity can grow in planned waves without redesign, with network, power, and thermal requirements considered up front.

Compute Architecture for AI Workloads

High-density GPU systems are optimized for multi-GPU and multi-node scaling with balanced CPU and memory. Pre-validated configurations speed deployment and help avoid bottlenecks as workloads move into production.

High-Performance Network Fabric

Low-latency, high-bandwidth fabrics are designed and tuned for distributed training and inference. Standardized topology and cabling practices help performance scale consistently as clusters grow and replicate.

Storage Architecture Optimized for AI

High-throughput, low-latency storage tiers support dataset reads, checkpointing, and iterative training. Storage is validated with real AI workloads and scales in step with compute and networking.

Built for Mission-Critical AI

The architecture targets environments that require high performance, high availability, and low integration risk—including healthcare, life sciences, semiconductor design, and industrial AI.

NVIDIA AI Software Platforms

AMAX’s Systems have been fully tested and validated for performance, reliability, and compatibility with the NVIDIA AI software stack including NVIDIA AI Enterprise, NVIDIA Omniverse, and NVIDIA Run:ai, enabling the building and deployment of production-ready agentic AI and physical AI systems anywhere, across clouds, data centers, or at the edge.

NVIDIA AI Enterprise

NVIDIA AI Enterprise is a cloud-native suite of software tools, libraries, and frameworks, including NVIDIA NIM and NeMo microservices, that accelerate and simplify the development, deployment, and scaling of AI applications.

NVIDIA Omniverse

NVIDIA Omniverse is a platform of APIs, SDKs, and services that enable developers to integrate OpenUSD, NVIDIA RTX™ rendering technologies, and generative physical AI into existing software tools and simulation workflows for industrial and robotic use cases.

NVIDIA Run:ai

NVIDIA Run:ai accelerates AI operations with dynamic orchestration across the AI life cycle, maximizing GPU efficiency, scaling workloads, and integrating seamlessly into hybrid AI infrastructure with zero manual effort.

AMAX AI Factory Product Portfolio

AMAX Engineering turns NVIDIA reference designs into production-ready AI Factories through end-to-end system validation across GPU/CPU/memory balance, tuned InfiniBand and Ethernet fabrics, AI-optimized storage I/O, and rack-level power plus liquid-ready thermal design. This is supported by documented bills of materials and repeatable build procedures. The result is deterministic performance and day-one operability, with automation for provisioning, monitoring, and lifecycle upgrades, enabling enterprises to scale from a first cluster to multi-site global capacity with consistent configuration control and confidence.

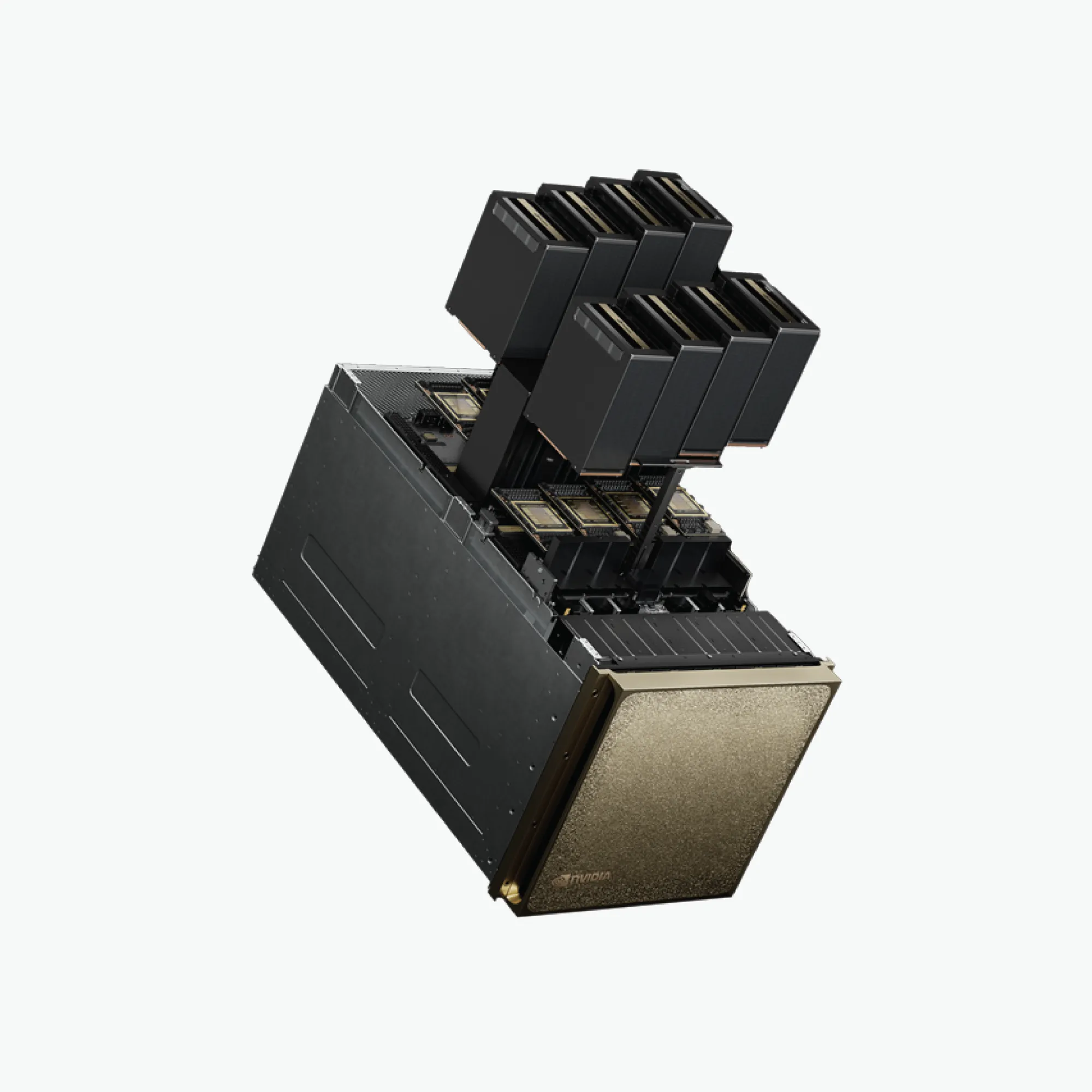

NVIDIA DGX™ GB300

Liquid-cooled, rack-scale platform with 72 GPUs for large-scale AI reasoning and deployment.

NVIDIA DGX™ GB200

Rack-scale system with Grace Blackwell for unified memory and accelerated AI performance.

NVIDIA DGX™ B300

Blackwell Ultra system built for MGX and enterprise racks, optimized for AI pipelines.

NVIDIA DGX™ B200

Blackwell-powered system for next-gen AI in a standard enterprise form factor.

AMAX RackScale 32 with NVIDIA™ HGX B300

High-density, rack-scale solution engineered for large-scale enterprise AI workloads

LiquidMax® RackScale 64

High-Density Liquid-Cooled AI Rack Solution