Accelerate

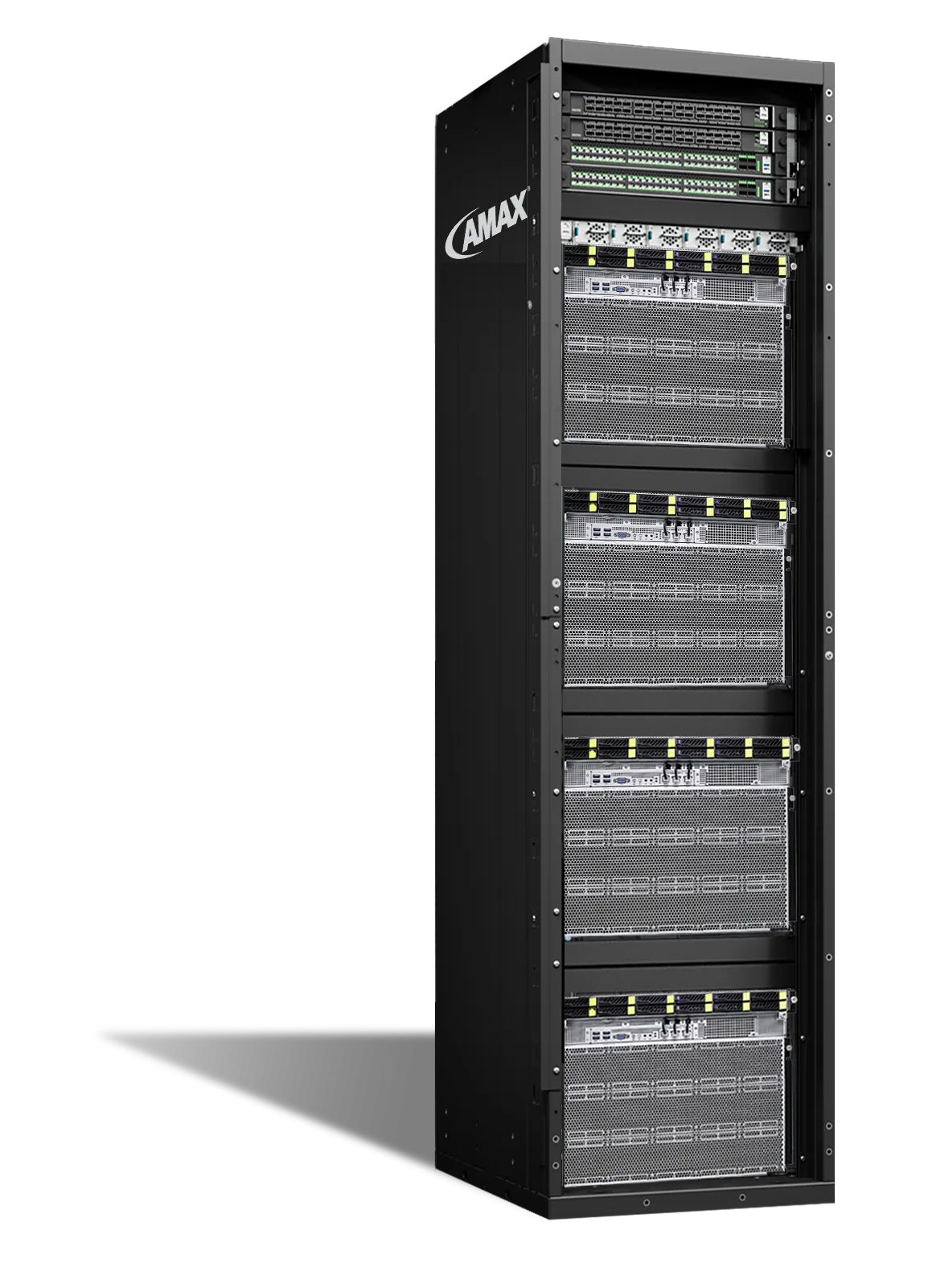

On-Prem AI with AMAX

High-density, rack-scale solution engineered for large-scale enterprise AI workloads, with 32 NVIDIA Blackwell Ultra GPUs on the NVIDIA HGX™ B300 platform

Tensor/Transformer Cores

Up to 576 specialized cores that accelerate transformer operations (e.g., attention layers) critical to LLM performance.

High Memory Capacity

Up to 8.4TB of HBM3e memory, enabling efficient handling of massive models and long context windows without bottlenecks.
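As a rough illustration of what 8.4TB of HBM3e means in practice, the sketch below estimates whether a model's weights alone fit in a given memory pool. It is a back-of-the-envelope check under stated assumptions, not a sizing tool: the bytes-per-parameter table and the function names are hypothetical, and KV cache, activations, optimizer state, and runtime overhead are deliberately ignored.

```python
# Assumed storage cost per parameter for common formats (illustrative only).
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weight_footprint_tb(params: float, dtype: str) -> float:
    """Weight footprint in terabytes (1 TB = 1e12 bytes); weights only."""
    return params * BYTES_PER_PARAM[dtype] / 1e12


def fits_in_hbm(params: float, dtype: str, hbm_tb: float) -> bool:
    """True if the model weights alone fit within hbm_tb terabytes of HBM."""
    return weight_footprint_tb(params, dtype) <= hbm_tb


if __name__ == "__main__":
    RACK_HBM_TB = 8.4   # the "up to 8.4TB" per-rack figure quoted above
    LLAMA_405B = 405e9  # approximate parameter count of Llama 3.1 405B
    for dtype in ("bf16", "fp8", "fp4"):
        tb = weight_footprint_tb(LLAMA_405B, dtype)
        print(f"{dtype}: {tb:.3f} TB of weights, fits: {fits_in_hbm(LLAMA_405B, dtype, RACK_HBM_TB)}")
```

Weights are only part of the budget: long context windows grow the KV cache, and training adds gradients and optimizer state, which is why headroom well beyond the raw weight size matters.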

In-Node Bandwidth

NVIDIA NVLink/NVSwitch interconnect provides high-bandwidth GPU-to-GPU communication within each node, helping keep GPUs fully utilized during large-scale training.

High-Speed Networking

800Gbps NVIDIA InfiniBand for rapid multi-node synchronization across distributed AI clusters.
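To show why interconnect bandwidth dominates synchronization cost, the sketch below uses the standard bandwidth term of a ring all-reduce: each of n participants sends and receives about 2(n-1)/n times the message size. This is a simplified model under assumptions: latency and protocol overhead are ignored, the gradient size is hypothetical, and the per-direction bandwidths are illustrative inputs rather than measured values for this system.

```python
def ring_allreduce_seconds(msg_bytes: float, n: int, bw_bytes_per_s: float) -> float:
    """Bandwidth-only estimate of a ring all-reduce.

    In a ring all-reduce (reduce-scatter followed by all-gather), each of the
    n participants sends and receives 2 * (n - 1) / n * msg_bytes, so the time
    is roughly that volume divided by the per-direction link bandwidth.
    Per-hop latency and protocol overhead are ignored.
    """
    if n < 2:
        return 0.0  # nothing to synchronize with a single participant
    return 2 * (n - 1) / n * msg_bytes / bw_bytes_per_s


if __name__ == "__main__":
    grad_bytes = 16e9  # hypothetical: gradients of an 8B-parameter model in BF16
    # Assumed per-direction bandwidths, for illustration only:
    nvlink_bw = 900e9  # half of NVLink's 1.8 TB/s bidirectional per-GPU figure
    ib_bw = 100e9      # one 800Gb/s InfiniBand link = 100 GB/s
    print(f"8 GPUs in one node over NVLink:   {ring_allreduce_seconds(grad_bytes, 8, nvlink_bw):.3f} s")
    print(f"4 nodes, one IB link each (ring): {ring_allreduce_seconds(grad_bytes, 4, ib_bw):.3f} s")
```

The gap between the two estimates is why a common pattern is to reduce within a node over NVLink first and send less traffic across the inter-node fabric; libraries such as NVIDIA NCCL select ring or tree algorithms based on the detected topology.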

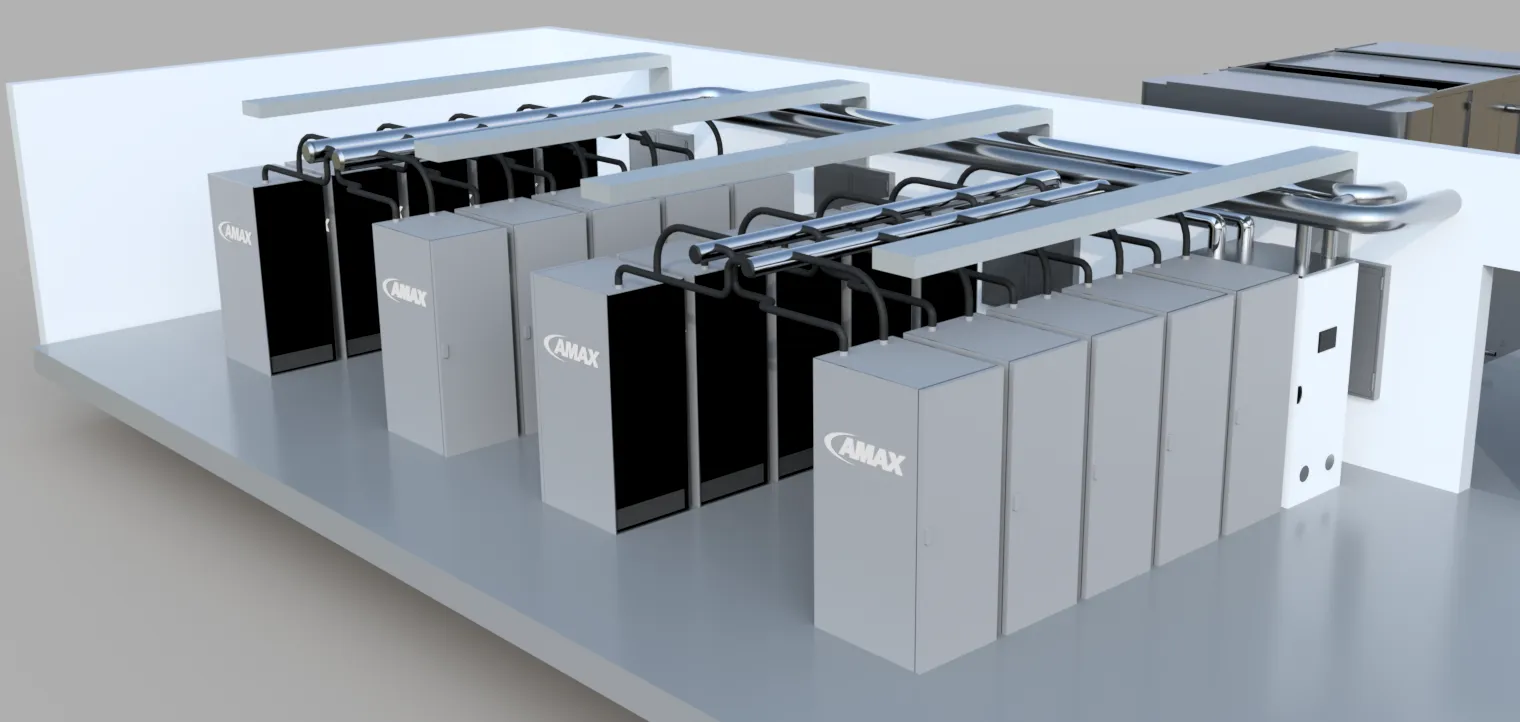

Solution Architecture

AMAX's solution architects optimize IT configurations for performance, scalability, and the reliability requirements of each industry.

Networking

AMAX designs custom networking topologies to enhance connectivity and performance in AI and HPC environments.

Thermal Management

AMAX implements innovative cooling technologies that boost performance and efficiency in dense computing setups.

Compute Optimization

AMAX ensures maximum performance through benchmarking and testing, aligning hardware and software for AI workloads.

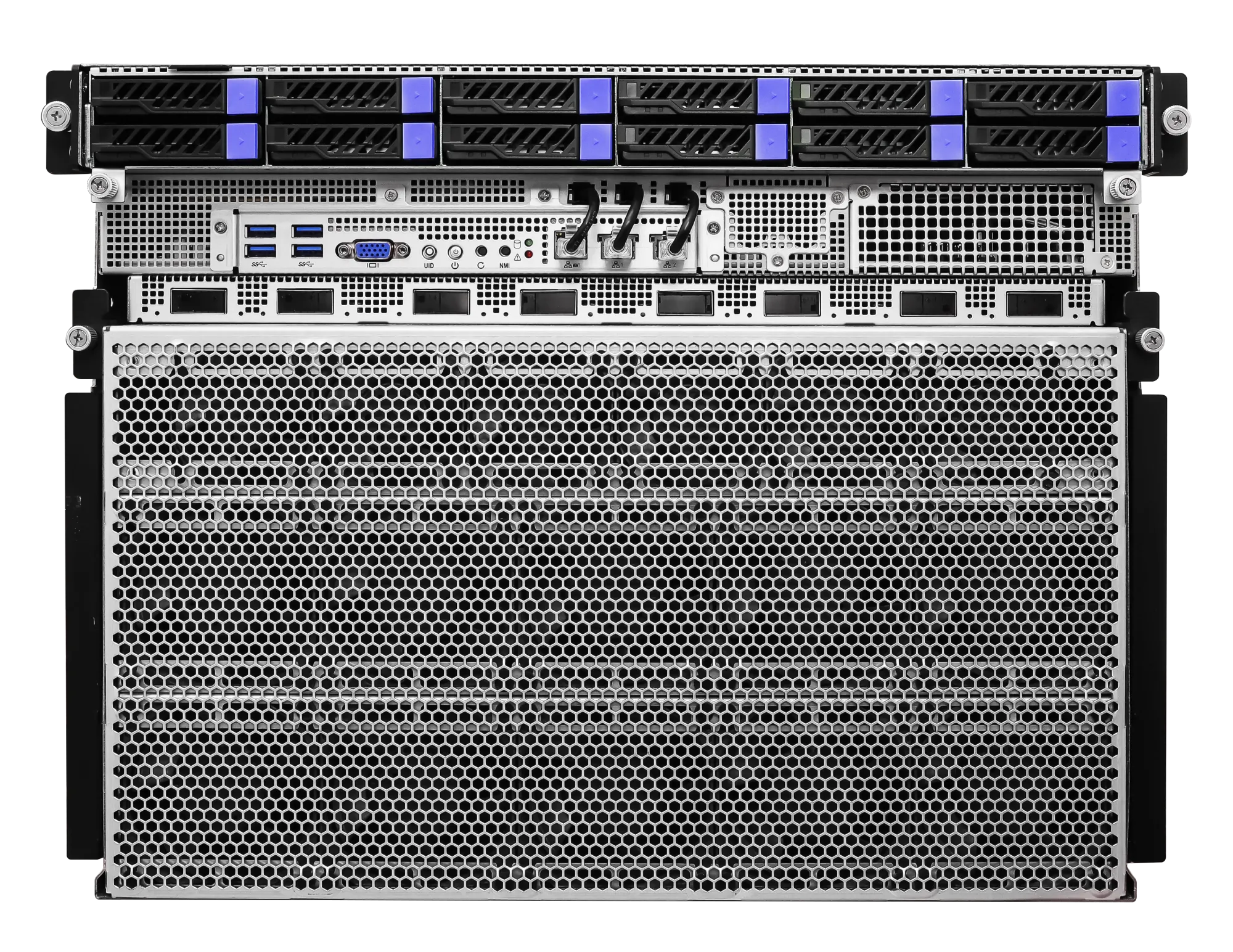

AceleMax® AXG-828U

The AceleMax® AXG-828U pairs 8x NVIDIA HGX B300 GPUs with dual Intel Xeon 6700-series CPUs, PCIe Gen 5 NVMe storage, and 12 expansion slots. Designed for dense AI deployments, it delivers high-speed interconnects and efficient thermal control in an 8U rackmount form factor.


Next Level Training Performance

The second-generation Transformer Engine with FP8 precision enables up to 4x faster training for large models such as Llama 3.1 405B. Combined with fifth-generation NVLink at 1.8 TB/s of GPU-to-GPU bandwidth, InfiniBand networking, and Magnum IO software, the platform scales efficiently across enterprise clusters.

Real Time Inference

HGX B300 delivers up to 11x higher inference performance than the Hopper generation. Blackwell Tensor Cores, combined with TensorRT-LLM optimizations, accelerate inference for Llama 3.1 405B and other large models.

Fully Managed AI Deployment

AMAX's approach to AI solutions begins with intelligent design, emphasizing the creation of high-performance computing and network infrastructures tailored to AI applications. We guide each project from concept to deployment, ensuring systems are optimized for both efficiency and future scalability.

Activate Your AI Infrastructure Instantly with HostMax™

HostMax™ is AMAX’s in-house deployment service that lets you power on and operate your liquid-cooled AI systems as soon as they’re built. Instead of waiting for colocation space, HostMax™ provides immediate hosting at AMAX’s facility, enabling a direct transition from assembly to deployment for testing, validation, and early production.


AMAX RackScale 32

AMAX RackScale 32 combines compute performance with efficient scalability for industries including healthcare, finance, the public sector, manufacturing, neoclouds, and research institutions.

- Dual-socket Intel® Xeon® 6700E/6700P-series processors

- 32x NVIDIA Blackwell Ultra GPUs

- Up to 8.4TB total HBM3e GPU memory per rack

- 576 PFLOPS total FP4 Tensor Core performance
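Dividing the rack-level totals by the GPU count gives a quick per-GPU view of the figures above. This is simple averaging of the quoted maxima; the 8-GPUs-per-node assumption (for example, AXG-828U-class HGX B300 nodes) is not stated in the spec list, and whether the FP4 figure assumes sparsity is not specified here.

```python
RACK_GPUS = 32          # Blackwell Ultra GPUs per rack, from the spec list
RACK_HBM_TB = 8.4       # maximum total HBM3e per rack, in TB
RACK_FP4_PFLOPS = 576   # rack-level FP4 Tensor Core total, in PFLOPS
GPUS_PER_NODE = 8       # assumption: 8-GPU HGX B300 nodes


def per_gpu(rack_total: float, gpus: int = RACK_GPUS) -> float:
    """Average share of a rack-level total per GPU."""
    return rack_total / gpus


if __name__ == "__main__":
    print(f"Nodes per rack (assumed): {RACK_GPUS // GPUS_PER_NODE}")
    print(f"HBM3e per GPU: ~{per_gpu(RACK_HBM_TB) * 1000:.1f} GB")
    print(f"FP4 per GPU:   ~{per_gpu(RACK_FP4_PFLOPS):.1f} PFLOPS")
```

Under these assumptions the rack works out to four nodes, with about 262.5 GB of HBM3e and 18 PFLOPS of FP4 compute per GPU on average.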