Stable Diffusion, developed by Stability AI, is an open-source text-to-image model that lets you quickly create artistic visuals from natural-language prompts. In a matter of seconds, this generative AI tool transforms your text input into compelling visual compositions.

While AMD announced ROCm 6.0 at its “Advancing AI” event, version 6.0 is not yet available for download from the ROCm Developer Hub. The latest downloadable release is still 5.7.1, which is what this blog post focuses on.

Our Ubuntu Linux server had the following hardware configuration:

- OS: Ubuntu 22.04 LTS

- GPU: 4x AMD Instinct MI250 (gfx90a)

- CPU: Dual AMD EPYC 7643 48-Core Processor

- RAM: 512GB

- Storage: 1TB

This blog walks through the technical steps needed to create a Stable Diffusion server that can be reached by URL, enabling seamless remote access.

This setup is best suited for businesses seeking to leverage their existing hardware infrastructure for local image generation, offering employees nearly unlimited access to create images without the concern of rapidly escalating cloud service bills.

The Need for On-Premises Stable Diffusion

Cloud services have real advantages for running the Stable Diffusion model, but there are compelling reasons to consider on-premises deployment. With limited local compute resources, the model may take longer to generate high-quality images; even so, on-premises hosting offers distinct benefits.

By opting for on-premises deployment, organizations gain greater control over their computational infrastructure, ensuring enhanced data security and regulatory compliance. This approach mitigates concerns related to data privacy and external dependencies, providing a more secure and tailored environment.

Moreover, running the Stable Diffusion model on-premises allows organizations to maintain autonomy over their computational processes, removing their reliance on external cloud services. This self-sufficiency is particularly advantageous for organizations that prioritize data sovereignty and want to optimize performance within their established infrastructure.

Choosing AMAX for Your Deployment

At AMAX, we specialize in transforming IT components into robust, efficient solutions. Our expertise in AI and advanced computing technologies makes us the ideal partner for deploying Stable Diffusion locally.

Setting Up Stable Diffusion on AMD MI250 Hardware

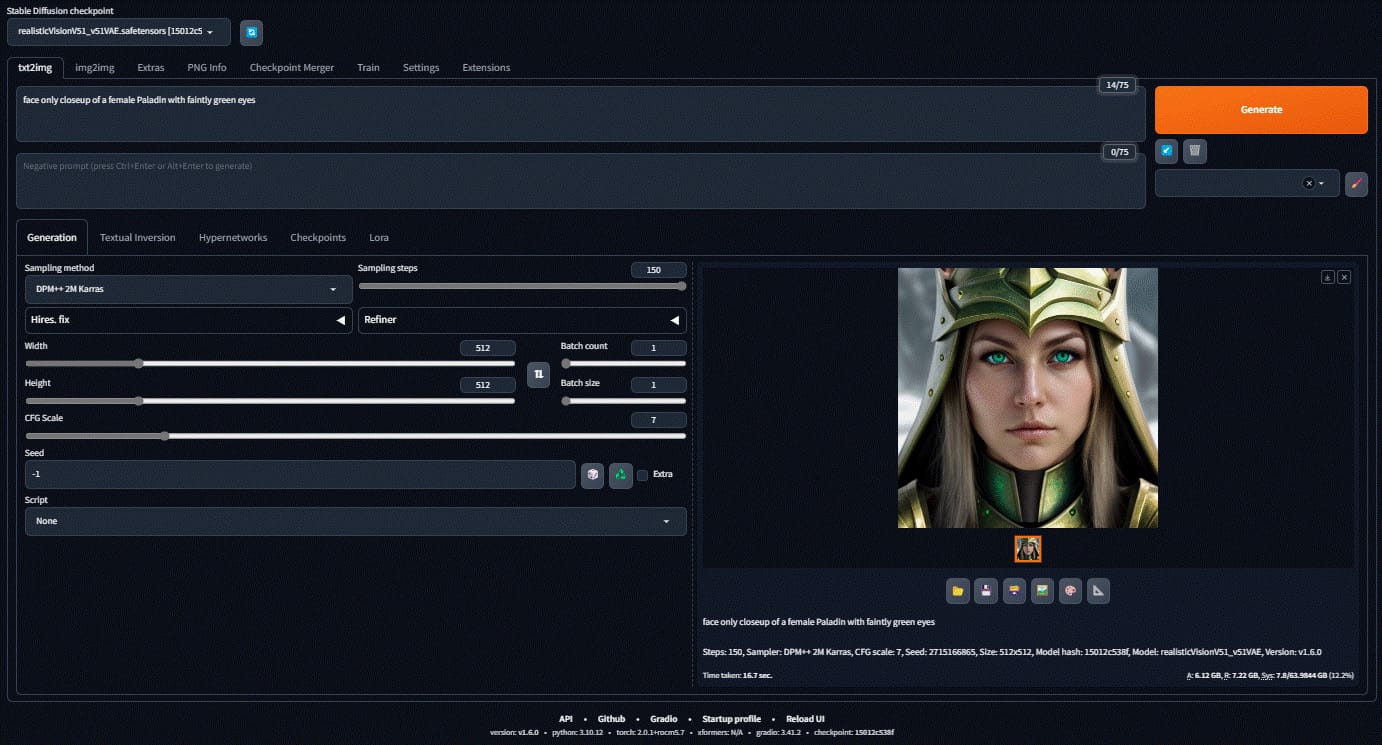

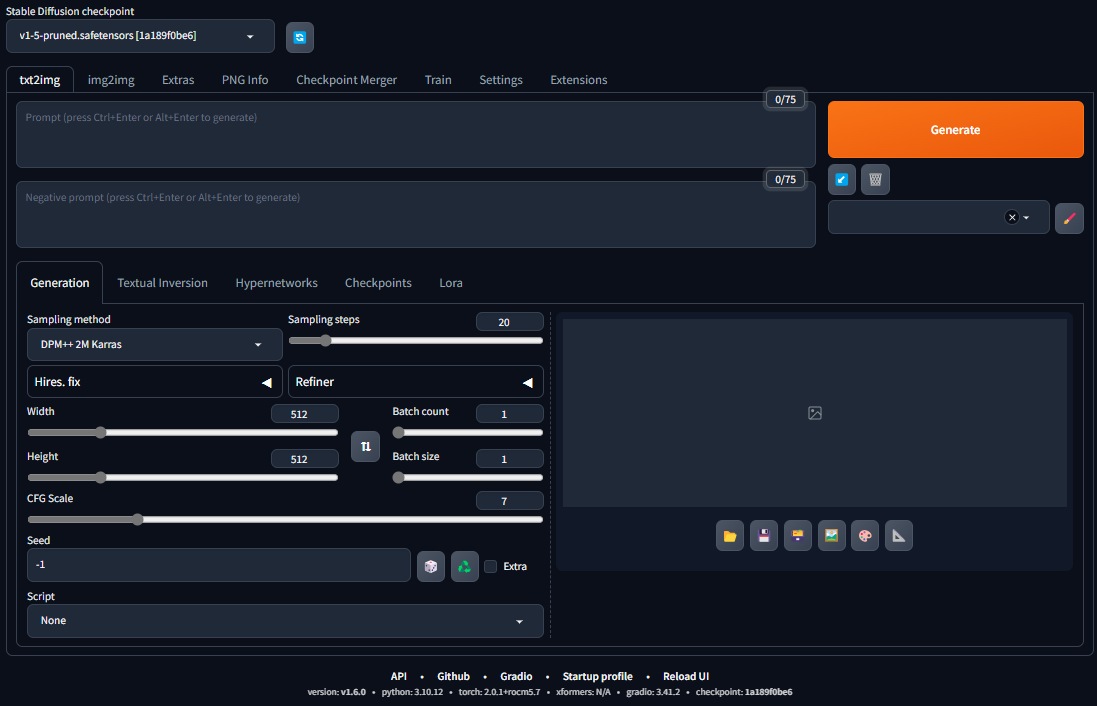

Here is a sneak peek of what the UI will look like.

Here are some sample images created.

Prerequisites

Before setting up your Stable Diffusion server, it’s essential to ensure that you have all the necessary tools and components in place. The following prerequisites are key to a successful installation and smooth operation of the system.

Setting up a production-ready, enterprise-grade server requires three key components:

- ROCm: AMD's counterpart to NVIDIA's CUDA platform.

- Third-party libraries such as PyTorch and BitsandBytes; the BitsandBytes project ported to the ROCm stack is essential for any quantization work.

- Utilities: rocm-smi, rocminfo, and nvtop.

Prepare the Environment – Installation Steps

Our goal in this blog is to provide additional resources that smooth out the installation process, share insights into the issues we encountered, and offer quick resolutions for them.

1. ROCm

What is ROCm?

ROCm is AMD's open-source software stack for GPU computing, comprising drivers, tools, and APIs for GPU programming. Because it is open source, it can be customized, and it is well suited to GPU-accelerated work in high-performance computing, AI, scientific computing, and CAD. Its HIP programming interface lets developers write applications that are portable across GPU platforms. ROCm supports key programming models and is integrated into ML frameworks such as PyTorch and TensorFlow. Radeon Software for Linux with ROCm harnesses AMD GPUs for parallel computing, offering a cost-effective alternative to cloud-based solutions. ROCm also extends to Windows through the HIP SDK, which provides a range of development features.

Installing ROCm

- On Linux, Quick Start (Linux) — ROCm 5.7.1 Documentation Home

- On Windows, Quick Start (Windows) — ROCm 5.7.1 Documentation Home

Let’s start with the installation of ROCm…

ROCm Repositories

1. Download and convert the package signing key

```shell
# Make the directory if it doesn't exist yet.
# This location is recommended by the distribution maintainers.
sudo mkdir --parents --mode=0755 /etc/apt/keyrings

# Download the key, convert the signing-key to a full
# keyring required by apt and store in the keyring directory
wget https://repo.radeon.com/rocm/rocm.gpg.key -O - | \
    gpg --dearmor | sudo tee /etc/apt/keyrings/rocm.gpg > /dev/null
```

2. Add the repositories

```shell
# Kernel driver repository for jammy
sudo tee /etc/apt/sources.list.d/amdgpu.list <<'EOF'
deb [arch=amd64 signed-by=/etc/apt/keyrings/rocm.gpg] https://repo.radeon.com/amdgpu/5.7.1/ubuntu jammy main
EOF

# ROCm repository for jammy
sudo tee /etc/apt/sources.list.d/rocm.list <<'EOF'
deb [arch=amd64 signed-by=/etc/apt/keyrings/rocm.gpg] https://repo.radeon.com/rocm/apt/debian jammy main
EOF

# Prefer packages from the rocm repository over system packages
echo -e 'Package: *\nPin: release o=repo.radeon.com\nPin-Priority: 600' | sudo tee /etc/apt/preferences.d/rocm-pin-600
```

3. Update the list of packages

```shell
sudo apt update
```

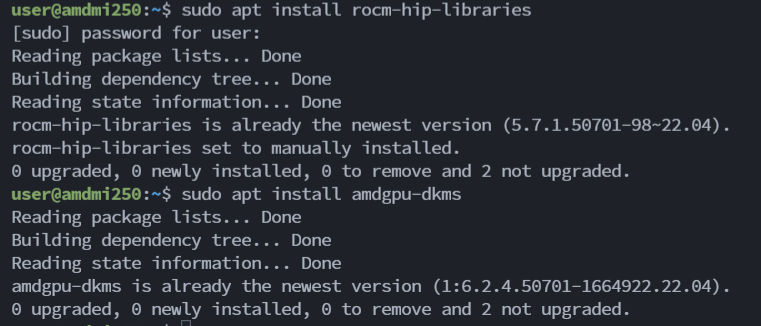
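Before installing anything, it can help to confirm that the repository pin is in place, since mixed package sources are a common cause of dependency errors. The sketch below is a hypothetical helper (check_rocm_pin is not part of ROCm or apt); it assumes the preferences file path used in step 2 and simply checks that the two pin lines are present exactly as written by the echo -e command.

```shell
# Hypothetical helper: verify the apt pin for repo.radeon.com.
# Defaults to the preferences file created above; pass another path as $1.
check_rocm_pin() {
  local file="${1:-/etc/apt/preferences.d/rocm-pin-600}"
  if [ ! -r "$file" ]; then
    echo "missing or unreadable: $file" >&2
    return 1
  fi
  # Both lines must match exactly (-x) as fixed strings (-F).
  if grep -qxF 'Pin: release o=repo.radeon.com' "$file" &&
     grep -qxF 'Pin-Priority: 600' "$file"; then
    echo "pin OK: $file"
  else
    echo "unexpected pin contents in $file" >&2
    return 1
  fi
}

# Usage on the server:
#   check_rocm_pin
#   apt-cache policy rocm-core   # candidate should come from repo.radeon.com
```

The apt-cache policy line in the usage comment is a standard apt command; with the pin active, its version table should show repo.radeon.com entries at priority 600.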

Install drivers

Install the amdgpu-dkms kernel module (the driver) on your system.

```shell
sudo apt install amdgpu-dkms
```

Install ROCm runtimes

Install the rocm-hip-libraries meta-package. This contains dependencies for most common ROCm applications.

```shell
sudo apt install rocm-hip-libraries
```

Reboot the system

Loading the new driver requires a reboot of the system.

```shell
sudo reboot
```

ROCm Utilities

Run rocminfo and clinfo to verify that ROCm was installed. rocm-smi provides a simple interface for monitoring Instinct GPU utilization; run watch -n 1 rocm-smi to refresh the data automatically every second.
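The rocminfo check can be narrowed to what matters for this server: which gfx targets are present and how many GPU agents ROCm sees. Below is a minimal sketch with two hypothetical helper functions that read rocminfo output on stdin; the parsing assumes rocminfo's "Name: gfx90a"-style agent lines. Each MI250 exposes its two graphics compute dies (GCDs) as separate GPUs, so on a 4x MI250 system you would typically expect eight gfx90a agents.

```shell
# Hypothetical helper: list the distinct gfx targets found in rocminfo output.
gfx_targets() {
  grep -oE 'gfx[0-9a-z]+' | LC_ALL=C sort -u
}

# Hypothetical helper: count GPU agents, i.e. "Name:" lines whose value is a
# bare gfx target. ISA lines such as "amdgcn-amd-amdhsa--gfx90a:..." and CPU
# agents are not counted.
count_gpu_agents() {
  grep -cE '^[[:space:]]*Name:[[:space:]]+gfx[0-9a-z]+[[:space:]]*$'
}

# Usage on the server:
#   rocminfo | gfx_targets        # e.g. gfx90a
#   rocminfo | count_gpu_agents   # number of GPU agents ROCm can see
```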

We encountered an unmet-dependency error while installing rocm-hip-libraries:

```
user@amdmi250:~/coding/pipline$ sudo apt install rocm-hip-libraries
Reading package lists... Done
Building dependency tree... Done
Reading state information... Done
Some packages could not be installed. This may mean that you have requested an impossible situation or if you are using the unstable distribution that some required packages have not yet been created or been moved out of Incoming. The following information may help to resolve the situation:

The following packages have unmet dependencies:
 rocm-hip-libraries : Depends: rocm-core (= 5.7.0.50700-63~22.04) but 5.7.1.50701-98~20.04 is to be installed
                      Depends: rocm-hip-runtime (= 5.7.0.50700-63~22.04) but it is not going to be installed
                      Depends: hipblas (= 1.1.0.50700-63~22.04) but 1.1.0.50701-98~20.04 is to be installed
                      Depends: hipblaslt (= 0.3.0.50700-63~22.04) but 0.3.0.50701-98~20.04 is to be installed
                      Depends: hipfft (= 1.0.12.50700-63~22.04) but 1.0.12.50701-98~20.04 is to be installed
                      Depends: hipsparse (= 2.3.8.50700-63~22.04) but 2.3.8.50701-98~20.04 is to be installed
                      Depends: hipsolver (= 1.8.1.50700-63~22.04) but 1.8.2.50701-98~20.04 is to be installed
                      Depends: rccl (= 2.17.1.50700-63~22.04) but 2.17.1.50701-98~20.04 is to be installed
                      Depends: rocalution (= 2.1.11.50700-63~22.04) but 2.1.11.50701-98~20.04 is to be installed
                      Depends: rocblas (= 3.1.0.50700-63~22.04) but 3.1.0.50701-98~20.04 is to be installed
                      Depends: rocfft (= 1.0.23.50700-63~22.04) but 1.0.23.50701-98~20.04 is to be installed
                      Depends: rocrand (= 2.10.17.50700-63~22.04) but 2.10.17.50701-98~20.04 is to be installed
                      Depends: rocsolver (= 3.23.0.50700-63~22.04) but 3.23.0.50701-98~20.04 is to be installed
                      Depends: rocsparse (= 2.5.4.50700-63~22.04) but 2.5.4.50701-98~20.04 is to be installed
E: Unable to correct problems, you have held broken packages.
```

Fix: manually uninstall each of the conflicting packages listed in the error, then start the installation process over.
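Collecting the package names from a long error like this one by hand is tedious. The sketch below uses hypothetical helper names and assumes you saved apt's output to a file (for example with sudo apt install rocm-hip-libraries 2>&1 | tee apt-error.log). It only prints a removal command for you to review; it does not run it.

```shell
# Hypothetical helper: extract the package names that appear after
# "Depends:" in apt's unmet-dependency report (read from stdin).
conflicting_packages() {
  grep -oE 'Depends: [a-z0-9][a-z0-9.+-]*' | awk '{ print $2 }' | LC_ALL=C sort -u
}

# Hypothetical helper: print (not execute) a single apt remove command
# covering every package found. Returns 1 if nothing was found.
removal_command() {
  local pkgs
  pkgs=$(conflicting_packages | tr '\n' ' ')
  pkgs=${pkgs% }
  [ -n "$pkgs" ] || return 1
  echo "sudo apt remove $pkgs"
}

# Usage:
#   removal_command < apt-error.log
```

Printing rather than executing is deliberate: some of the listed packages may not be installed at all, so the command is worth checking against dpkg -l | grep rocm before you run it.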

Useful commands

Here is a list of some helpful commands to ensure everything registered correctly…

Check GPUs

```shell
lspci -nn | grep -E 'VGA|Display'
sudo apt install inxi
inxi -G
rocminfo | grep gfx
```

ROCm version check

```shell
apt show rocm-libs -a
dpkg -l | grep rocm
```
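The dpkg output can also be checked programmatically. In the error shown earlier, the version suffix appears to identify the Ubuntu release a package was built for (~22.04 for jammy, ~20.04 for focal), so a mismatch on this Ubuntu 22.04 server is a useful warning sign. This is a minimal sketch with hypothetical helper names; it reads dpkg -l output on stdin and assumes the usual "ii  name  version  arch  description" column layout.

```shell
# Hypothetical helper: print the installed rocm-core version from dpkg -l output.
rocm_core_version() {
  awk '$1 == "ii" && ($2 == "rocm-core" || $2 == "rocm-core:amd64") { print $3; exit }'
}

# Hypothetical helper: succeed only if a version string carries the
# Ubuntu 22.04 (jammy) build suffix.
is_jammy_build() {
  case "$1" in
    *~22.04) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage on the server:
#   v=$(dpkg -l | rocm_core_version)
#   is_jammy_build "$v" && echo "rocm-core $v (jammy build)" || echo "check rocm-core: $v"
```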

This blog focuses on AMD ROCm, but for those interested in an NVIDIA solution, here is a link to the CUDA Toolkit:

[CUDA Toolkit 12.2 Downloads | NVIDIA Developer](NVIDIA Developer)

2. Install the Stable Diffusion WebUI (AUTOMATIC1111)

- [Install and Run on AMD GPUs](AUTOMATIC1111/stable-diffusion-webui Wiki)

- [Install and Run on NVidia GPUs](AUTOMATIC1111/stable-diffusion-webui Wiki)

3. Move your model checkpoint files (.ckpt or .safetensors) into the models/Stable-diffusion folder.

Be sure to give your models meaningful filenames.
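A small helper can move downloaded checkpoints into place and skip anything that is not a .ckpt or .safetensors file. This is a hypothetical sketch: install_models is not part of the WebUI, and its first argument is assumed to be the path to your stable-diffusion-webui clone. Existing files with the same name are left untouched rather than overwritten.

```shell
# Hypothetical helper: install_models <webui_dir> <file>...
install_models() {
  local webui_dir="$1"; shift
  local dest="$webui_dir/models/Stable-diffusion"
  mkdir -p "$dest" || return 1
  local f
  for f in "$@"; do
    case "$f" in
      *.ckpt|*.safetensors)
        if [ -e "$dest/$(basename "$f")" ]; then
          echo "already present, skipping: $f" >&2
        else
          mv -- "$f" "$dest/"
        fi
        ;;
      *) echo "skipping (not .ckpt/.safetensors): $f" >&2 ;;
    esac
  done
}

# Usage:
#   install_models ~/stable-diffusion-webui ~/Downloads/*.safetensors
```

The file name is what appears in the WebUI's checkpoint dropdown, which is why meaningful names pay off once several models are installed.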

4. Install PyTorch
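The WebUI installs PyTorch into its own virtual environment on first launch, and its launcher honors a TORCH_COMMAND environment variable (commonly set in webui-user.sh) that overrides the default install command. The sketch below assumes PyTorch's wheel index naming of whl/rocm<major.minor>; check that wheels for your ROCm version actually exist on download.pytorch.org before relying on it. The helper name is hypothetical.

```shell
# Hypothetical helper: build a pip command for ROCm builds of PyTorch.
# Assumes the index URL pattern https://download.pytorch.org/whl/rocm<ver>.
torch_rocm_command() {
  local rocm_ver="${1:?usage: torch_rocm_command <rocm major.minor>}"
  echo "pip install torch torchvision --index-url https://download.pytorch.org/whl/rocm${rocm_ver}"
}

# Override the launcher's default PyTorch install (e.g. in webui-user.sh).
export TORCH_COMMAND="$(torch_rocm_command 5.7)"
```

If the virtual environment already contains a different PyTorch build, the --reinstall-torch launch flag forces a reinstall. Afterwards, running python -c "import torch; print(torch.version.hip, torch.cuda.device_count())" inside the venv should print a HIP version and the number of visible GPUs, since ROCm builds of PyTorch expose AMD GPUs through the torch.cuda API.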

Install BitsandBytes

5. Start Stable Diffusion

Here is the command we used to run the service locally.

```shell
./webui.sh --precision full --no-half --skip-torch-cuda-test --server-name 0.0.0.0 --no-gradio-queue
```

And you're in!

To run the WebUI as a background service and allow access by URL, we used this command:

```shell
nohup ./webui.sh --listen --xformers --administrator --no-gradio-queue --device-id 0 --port 7860 &> nohup.sd.7860.1004 &
```
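A plain nohup ... & leaves no record of which process to check later. A minimal sketch, using hypothetical helper and file names: start the command in the background with its output in a log file, save its PID, and later test whether that launcher process is still alive with kill -0.

```shell
# Hypothetical helper: start_background <logfile> <pidfile> <command> [args...]
start_background() {
  local log="$1" pidfile="$2"; shift 2
  nohup "$@" > "$log" 2>&1 &
  echo $! > "$pidfile"
}

# Hypothetical helper: succeed if the process recorded in <pidfile> is alive.
is_running() {
  local pidfile="$1"
  [ -r "$pidfile" ] && kill -0 "$(cat "$pidfile")" 2>/dev/null
}

# Usage (flags copied from the service command above):
#   start_background nohup.sd.7860.log webui.7860.pid \
#     ./webui.sh --listen --xformers --administrator --no-gradio-queue --device-id 0 --port 7860
#   is_running webui.7860.pid && echo "WebUI launcher is running"
```

The recorded PID belongs to the webui.sh launcher, which starts Python as a child process, so this is a liveness check rather than a clean way to stop the server.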

Note on Multiple GPU Utilization

Stable Diffusion (SD) does not natively distribute work across multiple GPUs. To harness multiple GPUs, launch one instance of webui.sh per GPU, assigning each instance a specific GPU (e.g., --device-id 0 or --device-id 1) and its own port.
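Generating one launch line per GPU by hand is error-prone. The sketch below is a hypothetical helper that prints one command per device, with ports counting up from 7860; the flags are copied from the service command above, and the log file naming is illustrative only. It executes nothing, so you can review the output first.

```shell
# Hypothetical helper: multi_gpu_commands <num_gpus> [base_port]
multi_gpu_commands() {
  local gpus="${1:?usage: multi_gpu_commands <num_gpus> [base_port]}"
  local base_port="${2:-7860}"
  local i=0 port
  while [ "$i" -lt "$gpus" ]; do
    port=$((base_port + i))
    echo "nohup ./webui.sh --listen --xformers --administrator --no-gradio-queue --device-id $i --port $port &> nohup.sd.$port.log &"
    i=$((i + 1))
  done
}

# Usage: review first, then pipe to bash to launch all instances.
#   multi_gpu_commands 8
#   multi_gpu_commands 8 | bash
```

Use the number of devices reported by rocm-smi as the GPU count; each instance is then reachable at http://your_ip_address:<port>.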

Testing Your Setup

To test your Stable Diffusion server, visit http://your_ip_address:7860. Note that 7860 is the default port.
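For a scripted check instead of a browser, a minimal sketch using curl: the hypothetical helper below succeeds only if the given URL answers with HTTP 200. The 10-second timeout is an arbitrary choice, and the check assumes the WebUI's front page returns 200 when it is up.

```shell
# Hypothetical helper: webui_up [url]; defaults to the local default port.
webui_up() {
  local url="${1:-http://localhost:7860}"
  local code
  # -w prints only the status code; curl reports 000 if it cannot connect.
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$url")
  [ "$code" = "200" ]
}

# Usage, from the server and then from another machine:
#   webui_up && echo "reachable locally"
#   webui_up http://your_ip_address:7860 && echo "reachable remotely"
```

If the local check passes but the remote one fails, the usual suspects are a missing --listen (or --server-name 0.0.0.0) flag or a firewall blocking the port.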

Tailoring Your Deployment

Experiment with other models that suit your style, and adjust settings such as resolution and sampling steps so that the output aligns with your creative vision. For alternative models that may better suit your image style, visit civitai.com.
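Resolution and sampling steps can also be set per request through the WebUI's HTTP API. This is a minimal sketch under an assumption the commands above do not cover: the WebUI must be started with the --api flag, which exposes the /sdapi/v1/txt2img endpoint. The helper name is hypothetical, and it only escapes backslashes and double quotes, so keep prompts to plain single-line text.

```shell
# Hypothetical helper: txt2img_payload <prompt> [steps] [width] [height]
txt2img_payload() {
  local prompt steps width height
  # Escape backslashes first, then double quotes, for a JSON string.
  prompt=$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  steps="${2:-20}"; width="${3:-512}"; height="${4:-512}"
  printf '{"prompt": "%s", "steps": %d, "width": %d, "height": %d}\n' \
    "$prompt" "$steps" "$width" "$height"
}

# Usage (WebUI started with --api):
#   txt2img_payload "a lighthouse at dusk" 30 768 512 |
#     curl -s -X POST -H 'Content-Type: application/json' -d @- \
#       http://your_ip_address:7860/sdapi/v1/txt2img
```

The response is JSON whose images field holds the generated images as base64 strings.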

Unleash Your Creativity

With the steps outlined in this guide, you are now fully equipped to start generating fantastic digital imagery. Stable Diffusion, tailored to your specific needs and deployed on your own hardware, enables limitless possibilities for image creation. Whether for professional projects, artistic endeavors, or personal exploration, the world of AI-generated imagery is now at your fingertips. Start creating, and watch as your ideas transform into visual realities.

Leveraging AMAX for Enhanced Stable Diffusion Setup

Enhance your Stable Diffusion deployment with AMAX's advanced hardware and expertise. Our specialized IT infrastructure is designed to maximize AI application efficiency, ensuring optimal performance for image generation. Rely on AMAX for not only powerful hardware but also expert guidance in configuring and managing your Stable Diffusion environment, tailored to your specific needs for a streamlined and effective experience.

Contact Us

For guidance on designing a system to build ROCm applications at scale, please contact us.