The NVIDIA H200 Tensor Core GPU is NVIDIA's latest release for Artificial Intelligence (AI) and High-Performance Computing (HPC), and the successor to the immensely popular NVIDIA H100. Over the past year, the H100 has been the top choice for AI applications, known for its performance and efficiency in the parallel processing that AI training and inference require. With significant increases in both memory capacity and bandwidth, the H200 is set to become the new benchmark in GPU technology. NVIDIA aims to build on the H100's market success, giving enterprises a compelling reason to upgrade for cutting-edge AI and HPC applications.

NVIDIA H200 Overview

- First GPU to incorporate HBM3e memory, offering 141 GB of capacity at 4.8 TB/s of bandwidth

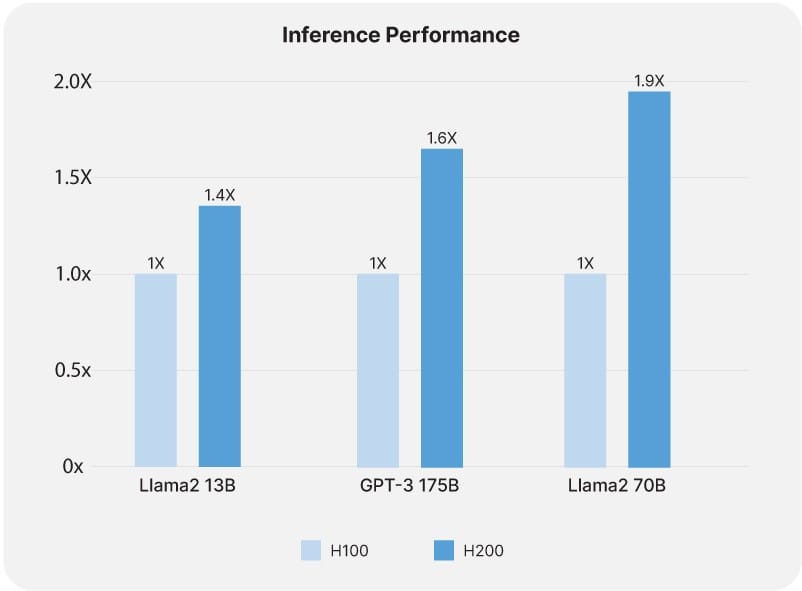

- 1.6X faster GPT-3 175B inference than the H100, and up to 110X faster performance than CPU-based systems in specific HPC applications

- Enhanced energy efficiency and reduced total cost of ownership (TCO)

- Nearly 2X (1.9X) faster inference for large language models such as Llama2 70B

Key Advancements in NVIDIA H200 over NVIDIA H100

The NVIDIA H200 brings significant advancements over the NVIDIA H100, primarily in memory capacity and bandwidth. It is the first GPU to incorporate HBM3e memory, with 141 GB of capacity and 4.8 TB/s of bandwidth. This enhancement is not just about larger storage; it is about the ability to move data to the compute units faster. That capability is vital for the extensive data requirements of modern AI models and HPC tasks, where rapid access to large datasets is crucial.
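To make the capacity figure concrete, here is a minimal back-of-the-envelope sketch in Python. It is not an NVIDIA tool or sizing method. It assumes nominal parameter counts taken from the model names (70 billion and 175 billion), counts 1 GB as 10^9 bytes, and looks only at model weights, ignoring KV cache, activations, and runtime overhead. The function names are hypothetical.

```python
# Rough check: do a model's weights alone fit in GPU memory?
# Assumptions (not from NVIDIA): 1 GB = 1e9 bytes, nominal parameter counts,
# and no allowance for KV cache, activations, or framework overhead.

H200_MEMORY_GB = 141  # capacity quoted in this article

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Return the memory needed for the model weights alone, in GB."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, memory_gb: float = H200_MEMORY_GB) -> bool:
    """Return True if the weights alone fit in the given memory capacity."""
    return weight_footprint_gb(num_params, precision) <= memory_gb


if __name__ == "__main__":
    for name, params in [("Llama2 70B", 70e9), ("GPT-3 175B", 175e9)]:
        for precision in ("fp16", "fp8"):
            gb = weight_footprint_gb(params, precision)
            print(f"{name} {precision}: {gb:.0f} GB, fits on one H200: {fits(params, precision)}")
```

Under these assumptions, the weights of a 70-billion-parameter model at 16-bit precision come to about 140 GB, just under the H200's 141 GB, while a 175-billion-parameter model still needs more than one GPU. Real deployments need headroom beyond the weights, so treat this as a first-pass estimate only.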

In terms of performance, the NVIDIA H200 significantly outperforms its predecessor, delivering 1.9X faster inference on Llama2 70B and 1.6X faster inference on GPT-3 175B.

For HPC applications, the NVIDIA H200 offers further gains: up to 110X faster application performance than traditional CPU-based solutions, and up to a 2X performance increase over previous GPU generations. These improvements in speed do not come at the cost of higher energy consumption. The NVIDIA H200 maintains energy efficiency and cost-effectiveness, in line with the growing emphasis on sustainable and economical computing.
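Speedup factors are easier to plan around when converted into time saved. The short Python sketch below does only that arithmetic: a job that runs S times faster takes 1/S of the original time. The speedup values are the ones quoted in this article; the function name is hypothetical, and the result assumes the whole job speeds up by the stated factor.

```python
# Convert an "N times faster" claim into the share of runtime saved.
# Assumption: the entire workload speeds up by the stated factor.


def time_reduction_pct(speedup: float) -> float:
    """Return the percentage of runtime saved for a given speedup factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return (1 - 1 / speedup) * 100


if __name__ == "__main__":
    quoted = [
        ("GPT-3 175B inference", 1.6),
        ("Llama2 70B inference", 1.9),
        ("HPC vs. CPU", 110),
    ]
    for label, speedup in quoted:
        print(f"{label}: {speedup}X faster = {time_reduction_pct(speedup):.1f}% less time")
```

By this arithmetic, 1.6X faster means 37.5% less time, 1.9X means roughly 47% less, and 110X means roughly 99% less.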

Impact on AI and High-Performance Computing

The enhanced memory and speed of the NVIDIA H200 have a substantial impact on AI applications, particularly Large Language Models (LLMs). The 141 GB of HBM3e memory and 4.8 TB/s of bandwidth allow faster processing of complex datasets, which is essential for training and running sophisticated AI models like GPT-3 or Llama2. This capability enables more efficient handling of the vast amounts of data these models require, which can shorten training times and speed up inference. The increased processing speed also supports real-time analysis and decision-making, essential in AI-driven applications such as autonomous vehicles and advanced robotics.
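One way to see why bandwidth matters for LLM inference is a simple memory-bound estimate. The Python sketch below assumes a simplified model that is not from NVIDIA: generating each token for a single request requires reading every model weight from memory once, so the token rate cannot exceed bandwidth divided by weight size. It ignores batching, KV cache traffic, and compute limits, and the function name is hypothetical.

```python
# Upper bound on single-request token generation when memory bandwidth is
# the bottleneck. Simplifying assumption: every weight is read once per token.

H200_BANDWIDTH_TB_S = 4.8  # bandwidth quoted in this article


def max_decode_tokens_per_s(bandwidth_tb_s: float, weight_gb: float) -> float:
    """Return the bandwidth-limited ceiling on tokens per second."""
    bytes_per_second = bandwidth_tb_s * 1e12
    bytes_per_token = weight_gb * 1e9
    return bytes_per_second / bytes_per_token


if __name__ == "__main__":
    # Weight sizes for a nominal 70-billion-parameter model (assumed values).
    for label, weight_gb in [("16-bit weights", 140), ("8-bit weights", 70)]:
        rate = max_decode_tokens_per_s(H200_BANDWIDTH_TB_S, weight_gb)
        print(f"{label}: at most about {rate:.0f} tokens/s per request")
```

For 140 GB of weights at 4.8 TB/s, the ceiling is about 34 tokens per second for a single request. Measured throughput depends on batch size, software stack, and precision, so this is an illustration of the relationship rather than a benchmark.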

In the realm of high-performance computing (HPC), the NVIDIA H200's advancements accelerate a variety of applications. For example, in scientific research and simulations, the GPU's ability to quickly process large datasets can significantly reduce the time required for complex computations. This speed is critical in areas like climate modeling, genomic sequencing, and physics simulations, where large volumes of data need to be analyzed rapidly. By enabling faster and more efficient data processing, the NVIDIA H200 aids researchers in obtaining results more quickly, facilitating breakthroughs in various scientific fields.

An additional advantage of the NVIDIA H200 is its focus on sustainable computing. Despite its increased performance capabilities, the GPU maintains energy efficiency, addressing the growing need for environmentally friendly technology solutions. This aspect is particularly important for large-scale computing facilities, where energy consumption and heat generation are major concerns. The NVIDIA H200's energy-efficient design helps reduce the overall carbon footprint of computing operations, making it a responsible choice for future-focused organizations.

AMAX's Role in Integrating New Technologies

At AMAX, we specialize in designing custom IT infrastructures that fully leverage the latest technology, such as the NVIDIA H200 GPU. Our solutions are tailored to meet the specific demands of AI, ensuring optimal use of the NVIDIA H200’s capabilities. We understand that each client's needs are unique, which is why we design each system's computing power, memory, and storage around the client's specific application.

Our expertise extends to advanced liquid cooling technologies, vital for maintaining the efficiency and longevity of high-performance GPUs like the NVIDIA H200. This approach is key to handling the heat output of such powerful units, ensuring stable and sustainable operation in high-density computing environments.

Are you ready to elevate your AI and HPC capabilities with the latest technology? Contact AMAX today to explore how our customized solutions, integrated with cutting-edge GPUs like the NVIDIA H200, can transform your computational infrastructure.