Your Personal AI Supercomputer

The increasing size and complexity of generative AI models is making development efforts on local systems challenging. Prototyping, tuning, and inferencing large models locally requires large amounts of memory and significant compute performance. As enterprises, software providers, government agencies, startups, and researchers staff up AI development efforts, the need for AI compute resources continues to grow.

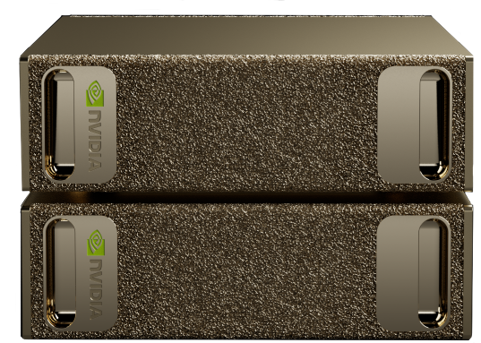

NVIDIA DGX Spark™ belongs to a new class of computers designed from the ground up to build and run AI. Powered by the NVIDIA GB10 Grace Blackwell Superchip, DGX Spark delivers up to 1 petaFLOP of AI performance. With 128 GB of coherent unified system memory, developers can experiment with, fine-tune, or run inference on the latest generation of reasoning AI models of up to 200B parameters from DeepSeek, Meta, Google, and others. Plus, NVIDIA ConnectX™ networking can connect two DGX Spark AI computers to enable inference on models up to 405B parameters.

Contact AMAX to speak with a solutions engineer and plan your development-to-deployment path.

This post outlines five lesser-known advantages of NVIDIA DGX Spark that make it more than just a compact GPU system. Each feature supports long-term scalability, reduces early development friction, and provides technical value beyond what most workstations can offer.

1. NVIDIA DGX Spark is based on the NVIDIA GB10 Superchip

A Practical Platform for AI Development

At the heart of DGX Spark is the new GB10 Grace Blackwell Superchip, optimized for a desktop form factor. GB10 features a powerful Blackwell GPU with fifth-generation Tensor Cores and FP4 support, delivering up to 1 petaFLOP of AI compute. GB10 also includes a high-performance 20-core Grace Arm CPU to supercharge data preprocessing and orchestration, speeding up model tuning and real-time inferencing. The GB10 Superchip uses NVLink™-C2C interconnect technology to deliver a CPU+GPU coherent memory model with 5X the bandwidth of PCIe Gen 5.

With 128 GB of unified system memory and support for the FP4 data format, DGX Spark can support AI models of up to 200B parameters, enabling AI developers to prototype, fine-tune and inference the latest generation of AI reasoning models—such as DeepSeek R1 distilled versions up to 70 billion parameters—on their desktop.
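
The 200B figure follows from simple arithmetic on weight storage. The sketch below is a rough, weights-only estimate under stated assumptions: 2 bytes per parameter for FP16, 1 for FP8, and 0.5 for FP4. It ignores the KV cache, activations, quantization scale factors, and memory used by the operating system, so real headroom is smaller than it suggests.

```python
# Assumed storage cost per parameter at each precision (weights only).
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}

UNIFIED_MEMORY_GB = 128  # DGX Spark unified system memory


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Weights-only memory estimate in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for precision in ("FP16", "FP8", "FP4"):
        gb = weight_footprint_gb(200e9, precision)
        verdict = "fits" if gb < UNIFIED_MEMORY_GB else "does not fit"
        print(f"200B @ {precision}: {gb:.0f} GB -> {verdict} in {UNIFIED_MEMORY_GB} GB")
```

Under these assumptions, 200B parameters need about 400 GB at FP16, 200 GB at FP8, and 100 GB at FP4, which is why FP4 support is what brings a model of this size within 128 GB.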

Compatible with Common Development Tools

NVIDIA DGX Spark supports a wide range of standard tools. Major frameworks such as PyTorch and TensorFlow are fully supported on Arm with little to no modification. Data science tools such as RAPIDS and Dask also run natively, enabling teams to test data processing, feature engineering, and evaluation pipelines within familiar environments. Developers can register for NVIDIA NGC to access GPU-accelerated software, including performance-optimized containers, pretrained AI models, and industry-specific SDKs.
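
A quick way to confirm that a framework install works on the Arm-based system is a short environment check. This is a minimal sketch that uses only the Python standard library plus an optional PyTorch import: it reports the CPU architecture (Arm Linux systems typically report `aarch64`) and, if PyTorch is installed, whether it can see a CUDA GPU. The function name `describe_environment` is illustrative, not part of any NVIDIA tool.

```python
import importlib.util
import platform


def describe_environment() -> dict:
    """Report CPU architecture and, if PyTorch is present, GPU visibility."""
    info = {
        "machine": platform.machine(),  # e.g. "aarch64" on Arm Linux
        "python": platform.python_version(),
        "torch_installed": importlib.util.find_spec("torch") is not None,
    }
    if info["torch_installed"]:
        import torch  # imported lazily so the check runs without PyTorch

        info["torch_version"] = torch.__version__
        info["cuda_available"] = torch.cuda.is_available()
        if info["cuda_available"]:
            info["gpu_name"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

If PyTorch is installed but `cuda_available` is False, the installed build is likely CPU-only.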

2. You can run 200B parameter models locally with full data control

Inference Support for Models up to 200 Billion Parameters

NVIDIA DGX Spark gives developers the ability to run inference on some of the largest publicly available AI models using local hardware. With 128 GB of coherent unified memory and support for FP4 with sparsity, the system can load and execute models up to 200 billion parameters. This includes models such as Llama 2, DeepSeek-V2, and Mistral, along with in-house foundation models built for private applications.

Running inference locally allows teams to evaluate performance, measure latency, and test prompt behavior without needing to allocate external GPU instances. It also creates space for deeper experimentation, such as comparing quantization strategies or observing output variation across different fine-tuned weights.
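
A simple harness makes latency comparisons repeatable, for example when timing the same prompts against differently quantized variants of a model. The sketch below assumes a hypothetical `generate(prompt) -> str` callable that wraps whatever local inference runtime you use. It discards warm-up calls and reports mean, median, and 95th-percentile end-to-end latency.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(
    generate: Callable[[str], str],
    prompts: List[str],
    warmup: int = 2,
    repeats: int = 5,
) -> Dict[str, float]:
    """Time a text-generation callable and summarize end-to-end latency in ms."""
    # Warm-up calls are excluded because first requests often pay one-time
    # costs such as weight loading, kernel compilation, or cache allocation.
    for prompt in prompts[:warmup]:
        generate(prompt)

    samples_ms: List[float] = []
    for _ in range(repeats):
        for prompt in prompts:
            start = time.perf_counter()
            generate(prompt)
            samples_ms.append((time.perf_counter() - start) * 1000.0)

    if len(samples_ms) < 2:
        raise ValueError("need at least two timed samples to compute percentiles")

    # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
    # method="inclusive" keeps the estimate within the observed min/max.
    p95 = statistics.quantiles(samples_ms, n=20, method="inclusive")[-1]
    return {
        "count": float(len(samples_ms)),
        "mean_ms": statistics.mean(samples_ms),
        "p50_ms": statistics.median(samples_ms),
        "p95_ms": p95,
    }


if __name__ == "__main__":
    # Stand-in for a real model call: sleeps briefly and echoes the prompt.
    def fake_generate(prompt: str) -> str:
        time.sleep(0.01)
        return prompt

    print(measure_latency(fake_generate, ["Hello", "Summarize this text"], repeats=3))
```

Running the same prompt list against two model variants and comparing the returned dictionaries gives a like-for-like view of how a quantization choice affects latency.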

Secure Development with Data Kept On-Prem

For many organizations, sending data to the cloud introduces risk or violates internal policy. NVIDIA DGX Spark makes it possible to keep sensitive datasets in a local, secure environment while still testing and tuning high-capacity models. Developers can work with production data during model validation, log results directly to local systems, and audit outputs without moving assets into third-party platforms.
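
As one illustration of keeping audit records on local storage, the sketch below appends each inference to a JSON Lines file on disk. The record schema (`timestamp`, `model_id`, SHA-256 digests of the prompt and output) is a hypothetical example, not a format required by DGX Spark or any NVIDIA tool. Storing digests rather than raw text is one option when the log itself should not duplicate sensitive data.

```python
import hashlib
import json
import tempfile
import time
from pathlib import Path


def sha256_text(text: str) -> str:
    """Hex SHA-256 digest of a UTF-8 string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def log_inference(log_path: Path, model_id: str, prompt: str, output: str) -> dict:
    """Append one inference record to a local JSON Lines audit log and return it."""
    record = {
        "timestamp": time.time(),
        "model_id": model_id,
        # Digests let auditors verify that a given prompt/output pair was logged
        # without writing the raw (possibly sensitive) text into the log.
        "prompt_sha256": sha256_text(prompt),
        "output_sha256": sha256_text(output),
        "output_chars": len(output),
    }
    log_path.parent.mkdir(parents=True, exist_ok=True)
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def read_log(log_path: Path) -> list:
    """Load every record from the audit log, oldest first."""
    with log_path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "audit" / "inference.jsonl"
        log_inference(path, "local-model-v1", "What is our Q3 policy?", "Draft answer...")
        print(read_log(path))
```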

This approach is especially relevant for teams in industries such as healthcare, government, finance, or defense, where compliance requirements or internal review processes apply. NVIDIA DGX Spark supports a full development cycle where all data stays within your control.

Easy Migration to Accelerated AI Infrastructure

Because DGX Spark uses the NVIDIA AI platform software architecture, users can move their models from the desktop to DGX Cloud or any accelerated cloud or data center infrastructure with virtually no code changes, making it easier than ever to prototype, fine-tune, and iterate. AMAX supports this process with deployment planning, integration services, and validation support to help customers move from initial development into full on-prem deployment when ready.

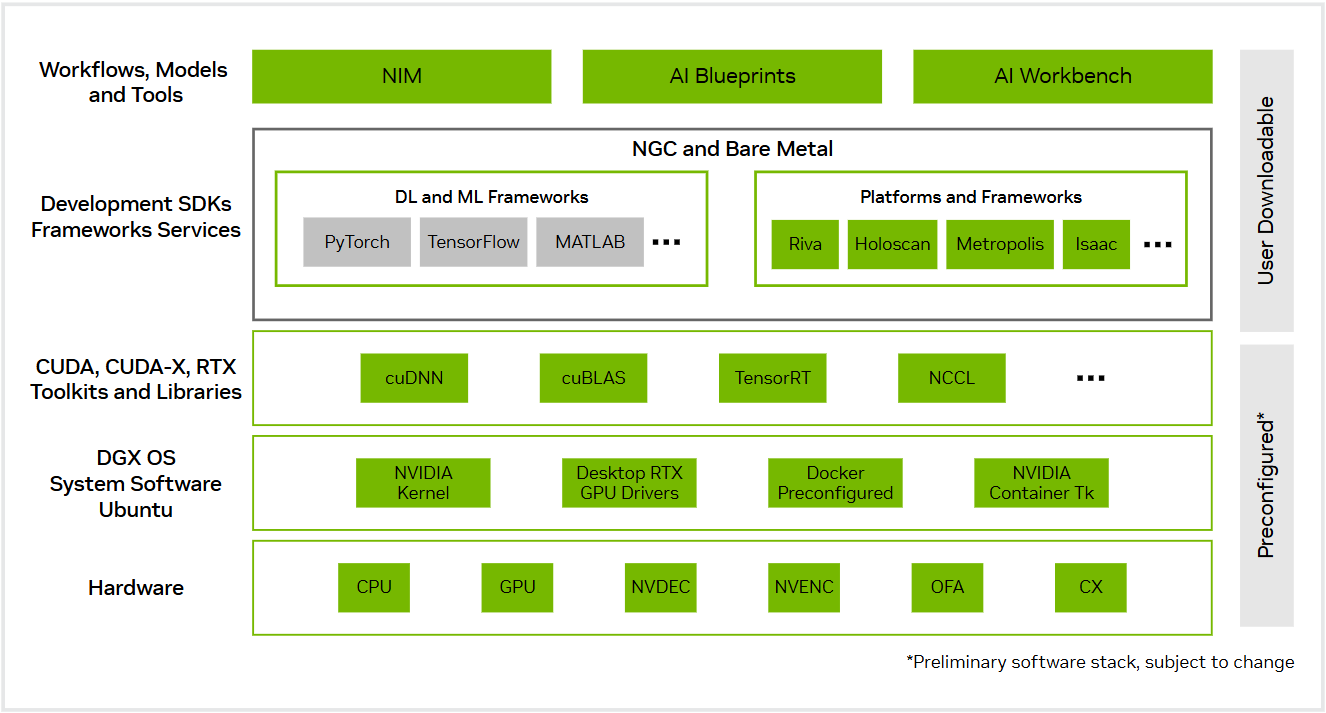

3. The NVIDIA AI software stack runs natively on NVIDIA DGX Spark

Preloaded with AI Development Tools

NVIDIA DGX Spark includes key components of the NVIDIA AI software stack, providing a ready-to-use environment for AI development out of the box. The system supports NVIDIA DGX OS, a Linux-based operating system optimized for GPU workloads, along with container runtimes, drivers, and libraries commonly used in deep learning and data science workflows. This allows developers to begin running containers and launching jobs without extensive configuration.

Preinstalled software enables developers to get projects up and running quickly. The software environment is maintained by NVIDIA and updated regularly, which helps reduce friction during setup and long-term development.

Optimized Containers and Pretrained Models from NGC

NVIDIA DGX Spark provides access to the NVIDIA NGC catalog, where developers can pull optimized containers for frameworks such as PyTorch, TensorFlow, and JAX. The catalog also includes pretrained models and sample pipelines that are performance-tuned for NVIDIA hardware. These resources help teams move faster by offering validated starting points for LLMs, vision models, recommender systems, and other common workloads.
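
Running an NGC framework container typically comes down to a single `docker run` command. The helper below only builds that command as an argument list. It assumes Docker and the NVIDIA Container Toolkit are installed (the toolkit is what makes `--gpus all` work), and the tag `YY.MM-py3` is a placeholder, since available tags change with each NGC release. The function name `ngc_container_command` is hypothetical.

```python
import shlex
from typing import List, Optional


def ngc_container_command(image: str, tag: str, workdir: Optional[str] = None) -> List[str]:
    """Build a `docker run` argument list for an image under nvcr.io/nvidia/."""
    cmd = [
        "docker", "run", "--rm", "-it",
        "--gpus", "all",   # expose GPUs through the NVIDIA Container Toolkit
        "--ipc=host",      # PyTorch data loaders may need more shared memory than Docker's default
    ]
    if workdir:
        # Mount a local project directory into the container.
        cmd += ["-v", f"{workdir}:/workspace"]
    cmd.append(f"nvcr.io/nvidia/{image}:{tag}")
    return cmd


if __name__ == "__main__":
    # Placeholder tag: look up a current one in the NGC catalog before running.
    print(shlex.join(ngc_container_command("pytorch", "YY.MM-py3", workdir="/home/me/project")))
```

Passing the returned list to `subprocess.run(cmd, check=True)` would launch the container.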

Because these assets align with production-scale infrastructure, developers can test and refine models on DGX Spark with the confidence that the same codebase can be moved to larger compute infrastructure when needed.

Support for Microservices and Workflow Tools

NVIDIA DGX Spark supports modern AI development workflows, including microservice deployment and model serving through NVIDIA Inference Microservices (NIM).
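
NIM microservices for LLMs expose an OpenAI-compatible HTTP API, so client code can stay the same whether the service runs on a DGX Spark or on larger infrastructure. The sketch below builds, but does not send, a chat-completion request using only the standard library. The `NIM_BASE_URL` environment variable, the default `http://localhost:8000/v1` address, and the model name are assumptions for illustration; check the documentation of the specific NIM you deploy.

```python
import json
import os
import urllib.request


def build_chat_request(prompt: str, model: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request for an OpenAI-compatible endpoint."""
    # Assumption: a local service on port 8000. Setting NIM_BASE_URL (a
    # hypothetical variable name) points the same code at another host.
    base_url = os.environ.get("NIM_BASE_URL", "http://localhost:8000/v1")
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Summarize our deployment checklist.", "example/model-name")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req) returns the JSON response.
```

Moving from the desktop to a larger deployment then only requires changing the base URL, not the calling code.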

Tools like NVIDIA AI Workbench and Blueprints are also supported, helping standardize experimentation and improve reproducibility. For teams that build internal tools or pipelines using container orchestration, NVIDIA DGX Spark offers a consistent and well-documented platform to develop and test those workloads in advance of larger deployments.

4. Two NVIDIA DGX Spark units can be clustered to support 405B parameter models

Compact Scaling for Demanding Model Sizes

NVIDIA DGX Spark can be directly linked to a second unit using the built-in ConnectX-7 SmartNIC, allowing developers to scale model size and memory capacity beyond what a single system can handle. In this two-node configuration, NVIDIA DGX Spark supports running models with up to 405 billion parameters using FP4 with sparsity. This is valuable for teams working with advanced LLMs or mixture-of-experts (MoE) models, where model size can impact output quality, reasoning depth, and token-level control.
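
To see why two units are needed at 405B, consider the weights alone: at FP4 (0.5 bytes per parameter), a 405B-parameter model needs roughly 202.5 GB, more than one system's 128 GB but within the two-unit total of 256 GB. One common way to split a model across machines is pipeline-style partitioning, where each node holds a contiguous block of layers. The sketch below assumes a hypothetical 126-layer model with equally sized layers and ignores embeddings, KV cache, and OS memory. Actual inference frameworks may use tensor parallelism or uneven splits instead.

```python
from typing import List


def partition_layers(num_layers: int, num_nodes: int) -> List[range]:
    """Assign contiguous, near-equal blocks of layer indices to each node."""
    base, extra = divmod(num_layers, num_nodes)
    blocks: List[range] = []
    start = 0
    for node in range(num_nodes):
        # The first `extra` nodes take one additional layer when the split is uneven.
        count = base + (1 if node < extra else 0)
        blocks.append(range(start, start + count))
        start += count
    return blocks


TOTAL_PARAMS = 405e9     # parameters in the model
BYTES_PER_PARAM = 0.5    # FP4
NUM_LAYERS = 126         # hypothetical layer count, for illustration only
NODE_MEMORY_GB = 128     # unified memory per DGX Spark

if __name__ == "__main__":
    per_layer_gb = TOTAL_PARAMS * BYTES_PER_PARAM / 1e9 / NUM_LAYERS
    for node, layers in enumerate(partition_layers(NUM_LAYERS, 2)):
        shard_gb = len(layers) * per_layer_gb
        print(f"node {node}: layers {layers.start}-{layers.stop - 1}, "
              f"~{shard_gb:.1f} GB of {NODE_MEMORY_GB} GB")
```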

Running inference at this scale allows developers to test how performance, accuracy, and latency behave across large parameter counts without relying on external infrastructure. It also provides a more realistic environment to assess model architecture decisions, token window behavior, and memory tradeoffs. For teams planning to move into larger distributed deployments, clustering two NVIDIA DGX Spark systems offers a practical starting point for evaluating scale-related performance characteristics within a local development workflow.
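
Context length is one of the memory tradeoffs worth measuring at this scale, because the attention key/value (KV) cache grows linearly with the number of tokens held in context. A common estimate is 2 (keys and values) × layers × KV heads × head dimension × tokens × batch size × bytes per value. The sketch below applies it to a hypothetical model shape (80 layers, 8 KV heads, head dimension 128, 16-bit cache values) chosen only for illustration.

```python
def kv_cache_gb(num_layers: int, num_kv_heads: int, head_dim: int,
                context_tokens: int, batch_size: int = 1,
                bytes_per_value: float = 2.0) -> float:
    """Estimate KV cache memory in GB (1 GB = 1e9 bytes)."""
    # Factor of 2: each layer caches one key and one value vector per KV head per token.
    num_values = 2 * num_layers * num_kv_heads * head_dim * context_tokens * batch_size
    return num_values * bytes_per_value / 1e9


if __name__ == "__main__":
    # Hypothetical model shape, chosen for illustration only.
    for tokens in (4_096, 32_768, 131_072):
        print(f"{tokens:>7} tokens: ~{kv_cache_gb(80, 8, 128, tokens):.1f} GB")
```

With these assumed numbers, each token costs about 0.33 MB of cache, so a single 131,072-token sequence needs roughly 43 GB on top of the model weights.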

AMAX can help you design and deploy DGX infrastructure that fits your AI roadmap.

5. NVIDIA DGX Spark supports development that scales into larger deployments

Designed to Align with NVIDIA DGX AI Infrastructure

NVIDIA DGX Spark runs on the Grace Blackwell GB10 Superchip, a desktop-scaled version of the Grace Blackwell technology found in the datacenter. This makes it easier for teams developing containerized workloads, training pipelines, and inference services to later scale to larger systems. Developers can work in a consistent environment without needing to rework core components as deployment needs grow.

To give developers a familiar experience, DGX Spark mirrors the same software architecture that powers industrial-strength AI factories. Because it runs NVIDIA DGX OS, which is based on Ubuntu Linux, developers can seamlessly deploy to DGX Cloud or any accelerated data center or cloud infrastructure.

Scalable with Support from AMAX

For organizations preparing to move from local prototyping to larger-scale AI infrastructure, AMAX provides expert support across planning, deployment, and system validation. This includes guidance on rack-scale NVIDIA DGX BasePOD deployments and custom liquid-cooled system builds. AMAX helps ensure that teams can build confidently from small-scale development toward production-ready solutions.

Built to Scale with You

NVIDIA DGX Spark offers a practical way to bring large-model development in-house, augmenting datacenter and cloud computing resources. With support for Arm-based architectures, 200B+ parameter inference, and the full NVIDIA AI software stack, it provides a clear path from prototyping to production.

Whether you're starting with a single unit or planning for larger DGX deployments, AMAX can help design and support your infrastructure every step of the way.