At AMAX, we craft and engineer your enterprise data center or production LLM solution the way you want with NVIDIA MGX™, an open, multi-generational reference architecture focused on accelerated computing for AI. With more than 100 standard configurations, and even more possible through our design frameworks, your ideal data center is now within reach.

Designed for CTOs, Data Scientists, and AI Engineers, our solutions address the complexities of developing high-end AI data centers, empowering enterprises with superior technology.

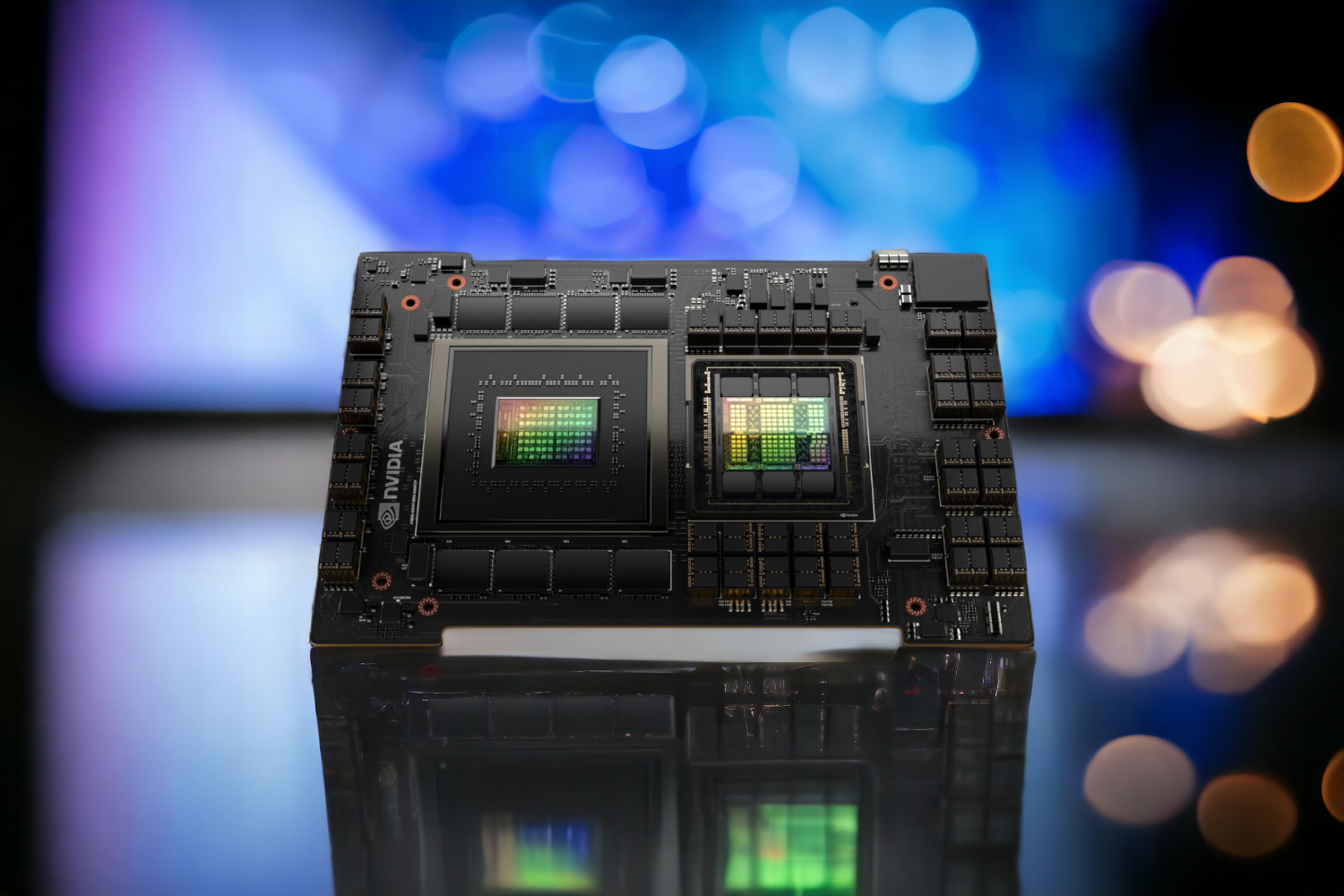

GH200 Specifications

| Feature | Specification |

|---|---|

| CPU | 72-core Grace CPU |

| GPU | NVIDIA H100 Tensor Core GPU |

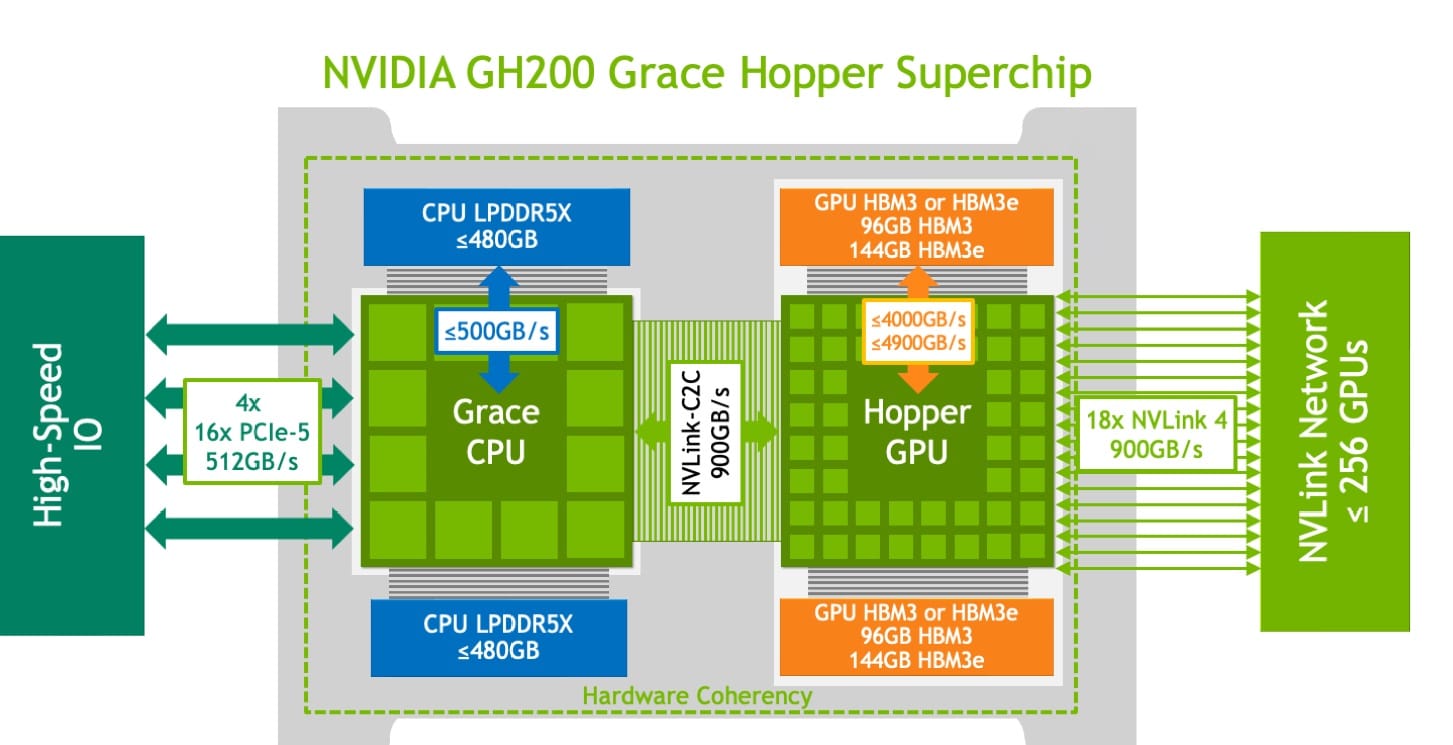

| Memory | Up to 480GB of LPDDR5X memory with ECC |

| HBM3 Support | Supports 96GB of HBM3 or 144GB of HBM3e |

| Total Fast-Access Memory | Up to 624GB |

| NVLink-C2C | 900GB/s coherent memory |
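The "Total Fast-Access Memory" figure appears to be the sum of the LPDDR5X and HBM capacities listed in the specification table. The short Python sketch below works through that arithmetic for both HBM options; the function and variable names are illustrative and not part of any NVIDIA or AMAX tooling.

```python
# Capacities in GB, taken from the specification table.
LPDDR5X_GB = 480
HBM_OPTIONS_GB = {"HBM3": 96, "HBM3e": 144}


def total_fast_access_gb(hbm_kind: str) -> int:
    """Add the CPU-attached LPDDR5X capacity to the chosen HBM capacity."""
    return LPDDR5X_GB + HBM_OPTIONS_GB[hbm_kind]


# Compute the combined capacity for each HBM option.
totals = {kind: total_fast_access_gb(kind) for kind in HBM_OPTIONS_GB}

for kind, total in totals.items():
    print(f"{kind}: {total} GB")
```

Under this reading, the "up to 624GB" figure corresponds to the 144GB HBM3e option, while the 96GB HBM3 option gives 576GB.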

Enhance Your AI Infrastructure

- NVIDIA H100 and GH200 Chips: Providing unparalleled computational power for AI workloads.

- NVIDIA Grace CPU: Offering high performance with exceptional energy efficiency.

- Advanced Cooling Technologies: Ensuring optimal operation and longevity.

NVIDIA GH200's Performance Advantage

- Achieve up to 10x more performance than NVIDIA A100 for data-intensive applications, accelerating breakthroughs in complex problem-solving.

Unified Memory with NVIDIA GH200

- Experience 7x more bandwidth between CPU and GPU than a PCIe Gen5 connection provides. Unified cache coherence and a single memory address space simplify programming and improve efficiency.
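The 7x figure can be sanity-checked with a quick calculation. The sketch below assumes the comparison baseline is a PCIe Gen5 x16 link at a nominal 128GB/s of bidirectional bandwidth; that baseline value is an assumption made for illustration, while the 900GB/s figure is the NVLink-C2C number from the specification table.

```python
# NVLink-C2C bandwidth in GB/s, from the GH200 specification table.
NVLINK_C2C_GBPS = 900

# Assumption: nominal bidirectional bandwidth of a PCIe Gen5 x16 link in GB/s.
PCIE_GEN5_X16_GBPS = 128


def bandwidth_ratio(link_gbps: float, baseline_gbps: float) -> float:
    """Return how many times faster the link is than the baseline."""
    return link_gbps / baseline_gbps


ratio = bandwidth_ratio(NVLINK_C2C_GBPS, PCIE_GEN5_X16_GBPS)
print(f"NVLink-C2C vs. assumed PCIe Gen5 x16 baseline: {ratio:.1f}x")
```

With these inputs the ratio comes out slightly above 7, which is consistent with the rounded claim.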

The NVIDIA MGX platform offers a modular approach to data center design that addresses key challenges in modern computing environments. Its main features are summarized below:

- Modular Design: MGX's architecture allows for over 100 system configurations, enabling rapid adaptation to evolving technology with minimal investment.

- System Flexibility: It supports diverse environments, including hyperscale, edge, and high-performance computing (HPC), adapting to varying data center needs.

- Thermal and Mechanical Solutions: MGX can handle higher GPU thermals through a suite of cooling solutions, addressing the limitations of standard CPU server designs.

- Power Efficiency: It incorporates multiple power delivery designs to meet different system requirements, supporting various architectures and power inputs.

- Comprehensive Ecosystem: The platform consists of modular bays, Host Processor Modules (HPM), and cooling solutions, allowing for a wide range of customizations.

- Partner Benefits: MGX offers significant advantages to ODMs and OEMs, including reduced design and development costs, forward compatibility, and faster market deployment.
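To illustrate how a modular design can yield a large number of system configurations, the sketch below enumerates every combination of a few building blocks. The option lists are hypothetical placeholders chosen for illustration and do not represent the actual MGX catalog; `SystemConfig` and its fields are likewise invented names.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class SystemConfig:
    """One combination of modular building blocks (illustrative only)."""
    chassis: str
    host_processor_module: str
    cooling: str


# Hypothetical option lists, not the real MGX catalog.
CHASSIS = ["1U", "2U", "4U"]
HOST_PROCESSOR_MODULES = ["hpm-a", "hpm-b", "hpm-c"]
COOLING = ["air", "liquid"]

# Every combination of chassis, host processor module, and cooling option.
configs = [
    SystemConfig(chassis, hpm, cooling)
    for chassis, hpm, cooling in product(CHASSIS, HOST_PROCESSOR_MODULES, COOLING)
]

print(len(configs))  # 3 * 3 * 2 = 18 combinations
```

Even with these small placeholder lists, the count grows multiplicatively with each added option, which is how a modular platform can reach more than 100 configurations from a limited set of parts.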

Take advantage of AMAX's system integration capabilities to drive innovation with reliability for your enterprise AI solutions. Join us in pioneering the next generation of AI data center technologies.