AI in Business Operations

AI is redefining enterprise operations, streamlining everything from sales and marketing to production and logistics. This shift is improving efficiency and processes at every level of business. Learning the best way to incorporate AI into your enterprise can dramatically increase ROI and drive a competitive advantage for your company.

NVIDIA DGX BasePOD Solutions

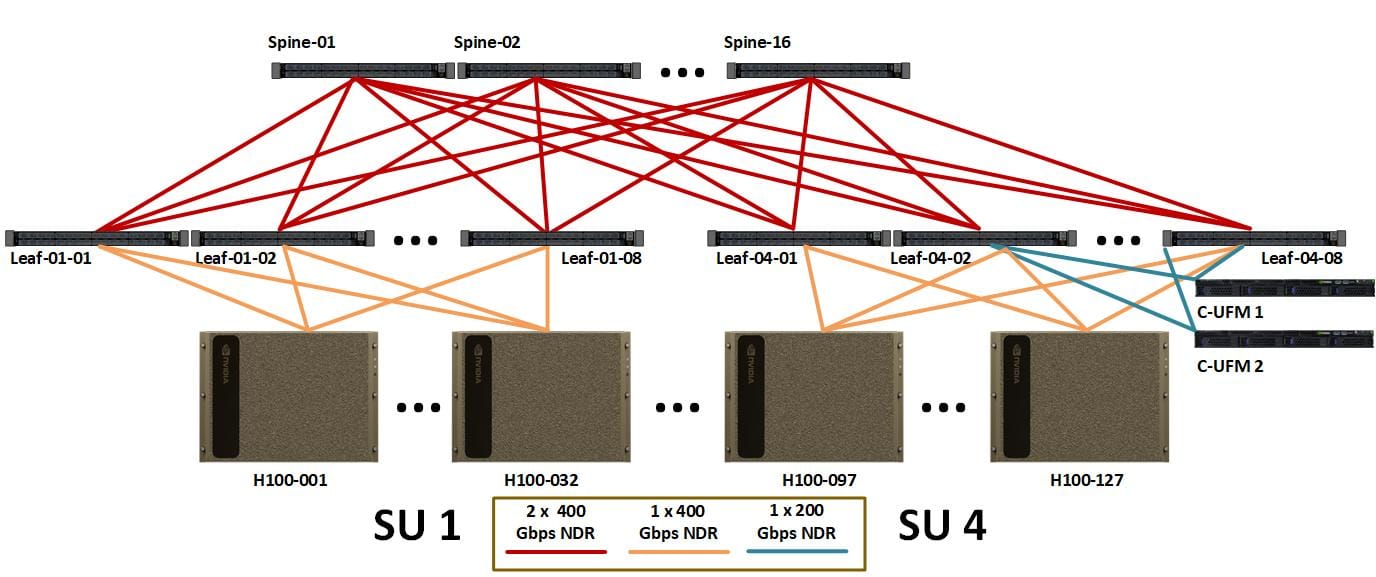

Enterprises looking to modernize operations require AI accelerator systems that incorporate the latest hardware and software. AMAX deploys NVIDIA DGX BasePOD™ GPU clusters globally, designing configurations that meet the unique demands of enterprise AI. Our solution architects provide proven system reference designs and recommend comprehensive software suites to guarantee swift AI deployment, removing design and management complexities.

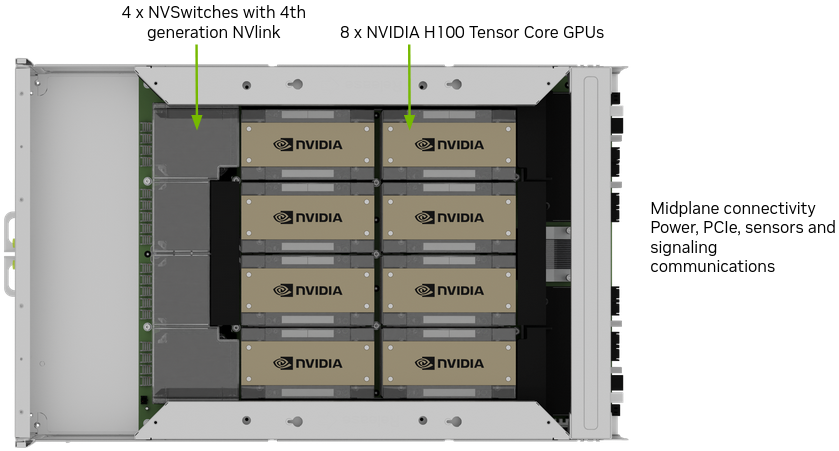

Central to the NVIDIA DGX BasePOD is the NVIDIA DGX™ H100 system, equipped with the NVIDIA H100 Tensor Core GPU. Each NVIDIA DGX H100 system is engineered with eight H100 GPUs to boost AI performance, making it suitable for complex tasks such as Retrieval Augmented Generation (RAG) systems, inference, and AI model training. The high performance and networking capabilities of these GPUs ensure the NVIDIA DGX BasePOD delivers exceptional processing power and efficiency for your enterprise AI workload.

NVIDIA DGX H100 System Topology

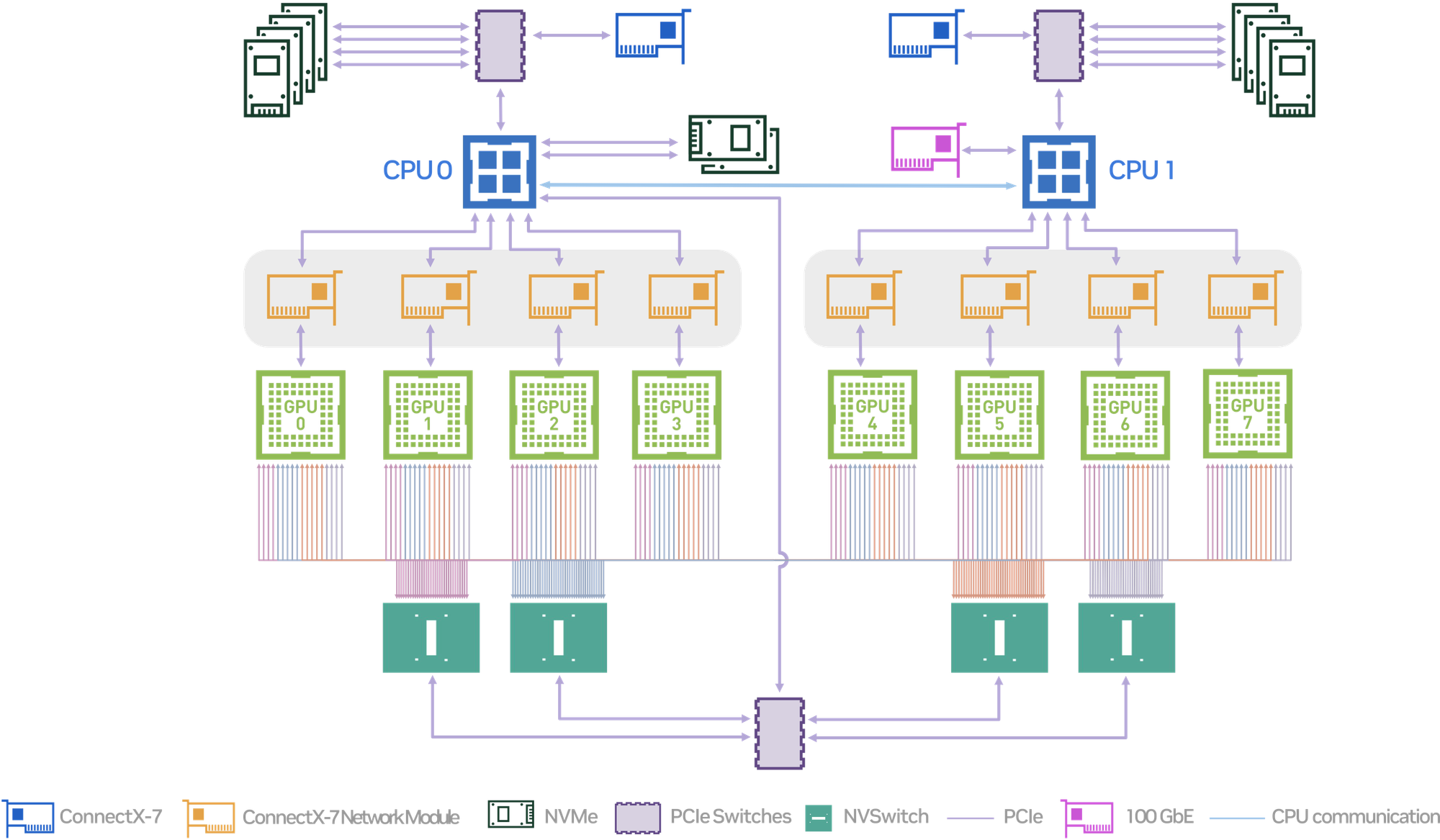

The NVIDIA DGX H100 system is engineered to optimize AI performance through a well-coordinated system topology that effectively manages data flow and processing tasks. At the core of the system are eight NVIDIA H100 Tensor Core GPUs, each connected via fourth-generation NVLink and NVSwitch, enabling up to 900 GB/s of bidirectional GPU-to-GPU bandwidth. This setup allows for rapid data exchanges between GPUs, essential for handling complex AI computations efficiently.

Complementing the GPUs, the system includes ConnectX-7 network interface cards that support network speeds up to 400 Gbps, facilitating swift data movement, which is critical for training large machine learning models. The system's computational processes are powered by dual Intel Xeon Platinum 8480C processors alongside 2 TB of system memory, providing the necessary horsepower for demanding AI tasks.
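To put the two bandwidth figures above on the same scale, here is a rough back-of-the-envelope sketch in Python. It only uses the peak numbers quoted in this section (900 GB/s bidirectional NVLink, 400 Gbps per network interface). The 100 GB payload is a hypothetical value chosen for illustration, the even split of the bidirectional figure into two directions is an assumption, and real transfers will be slower because of protocol overhead and contention.

```python
def gbps_to_gb_per_s(gigabits_per_second: float) -> float:
    """Convert a link rate from gigabits/s to gigabytes/s (8 bits per byte)."""
    return gigabits_per_second / 8.0


def ideal_transfer_seconds(payload_gb: float, rate_gb_per_s: float) -> float:
    """Idealized transfer time at a sustained peak rate, ignoring all overhead."""
    return payload_gb / rate_gb_per_s


# Peak figures quoted in the text above.
NVLINK_BIDIRECTIONAL_GB_PER_S = 900.0
NIC_GBPS = 400.0

# Assumption: the bidirectional figure splits evenly between the two directions.
nvlink_one_way_gb_per_s = NVLINK_BIDIRECTIONAL_GB_PER_S / 2

# 400 gigabits/s expressed in gigabytes/s.
nic_gb_per_s = gbps_to_gb_per_s(NIC_GBPS)

# Hypothetical payload size, for illustration only.
PAYLOAD_GB = 100.0

nvlink_seconds = ideal_transfer_seconds(PAYLOAD_GB, nvlink_one_way_gb_per_s)
nic_seconds = ideal_transfer_seconds(PAYLOAD_GB, nic_gb_per_s)

print(f"Network interface: {nic_gb_per_s:.0f} GB/s, {nic_seconds:.3f} s for {PAYLOAD_GB:.0f} GB")
print(f"NVLink (one way):  {nvlink_one_way_gb_per_s:.0f} GB/s, {nvlink_seconds:.3f} s for {PAYLOAD_GB:.0f} GB")
```

The takeaway is the order-of-magnitude gap: a single 400 Gbps link moves about 50 GB/s at best, so GPU-to-GPU traffic inside the system has far more headroom than traffic leaving it over one network interface.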

Storage is handled by 30 TB of high-speed NVMe SSDs, providing fast reads and writes that further enhance the system’s performance. The system's architecture is interconnected through high-speed PCIe switches, which integrate the GPUs, CPUs, and storage components. This integrated approach ensures that the NVIDIA DGX H100 can efficiently manage and process large datasets, making it suitable for enterprise-scale AI applications in fields such as natural language processing and data analytics.
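For capacity planning, the per-system figures above can be multiplied out across a cluster. The following Python sketch is illustrative only: `NodeSpec` and `cluster_totals` are hypothetical names, the node counts are arbitrary examples, and the totals cover only the resources inside each DGX H100 system as described in this section, not external storage or networking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-system resources, taken from the figures quoted in the text."""
    gpus: int
    system_memory_tb: float
    nvme_tb: float


@dataclass(frozen=True)
class ClusterTotals:
    """Aggregate resources for a cluster of identical systems."""
    nodes: int
    gpus: int
    system_memory_tb: float
    nvme_tb: float


# One DGX H100 system as described above: 8 GPUs, 2 TB memory, 30 TB NVMe.
DGX_H100 = NodeSpec(gpus=8, system_memory_tb=2.0, nvme_tb=30.0)


def cluster_totals(node: NodeSpec, node_count: int) -> ClusterTotals:
    """Multiply per-system resources by the number of systems in the cluster."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    return ClusterTotals(
        nodes=node_count,
        gpus=node.gpus * node_count,
        system_memory_tb=node.system_memory_tb * node_count,
        nvme_tb=node.nvme_tb * node_count,
    )


# Hypothetical cluster sizes, for illustration only.
for count in (2, 4, 8):
    totals = cluster_totals(DGX_H100, count)
    print(
        f"{totals.nodes} systems: {totals.gpus} GPUs, "
        f"{totals.system_memory_tb:.0f} TB memory, {totals.nvme_tb:.0f} TB NVMe"
    )
```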

Cluster Designs for Optimal AI Performance

AMAX adapts NVIDIA’s reference architectures to meet your specific requirements, delivering a customized rack-level solution optimized for AI workloads. This approach improves speed and scalability while keeping costs in check. Our process includes a detailed assessment of needs, careful component selection, performance optimization, rigorous testing, and system bring-up. The result is a high-performance cluster infrastructure architected around your AI goals, reflecting our commitment to providing effective solutions.

Comprehensive Support for Continuous Optimization

At AMAX, our services extend beyond setup. We provide remote access capabilities and post-sales engineering support, ensuring your solution works for you. We are committed to maximizing your deployment's uptime: we address evolving needs and continually optimize your cluster's performance. This allows you to focus on core business objectives with peace of mind, knowing your infrastructure is in expert hands.

- Simplified Deployment: The NVIDIA DGX H100 BasePOD’s turnkey nature drastically cuts down the time and complexity usually required to get AI systems operational, enabling efficient and straightforward setup.

- Scalability: Our customers benefit from the modular design of the NVIDIA DGX H100 BasePOD, which allows for easy expansion in line with growing demands, ensuring the infrastructure can evolve as business needs change.

- Reliability and Performance: Engineered for demanding AI tasks, the NVIDIA DGX H100 BasePOD offers exceptional reliability and high performance, managing complex computations with ease.

Deploying AI with NVIDIA AI Workbench

NVIDIA AI Workbench is a platform designed to facilitate the development and deployment of artificial intelligence models, particularly focusing on generative AI and large language models (LLMs). It provides developers and data scientists with an integrated suite of tools and frameworks that help streamline the AI workflow, from model training and optimization to deployment and scaling.

The video demo walks you through starting a project by cloning the SDXL and Llama 2 models directly from GitHub. You'll learn how to personalize these models with your own datasets, and see how NVIDIA AI Workbench supports a smooth progression from local development environments to scalable cloud or data center deployments.

AMAX Solutions for Streamlined AI Deployment

AMAX provides NVIDIA DGX BasePOD configurations with proven ecosystem storage solutions to simplify the deployment of accelerated, on-premises AI. With AMAX, you can choose from leading partners like DDN, WEKA, and VAST, or design a custom solution to meet your storage needs.

The NVIDIA DGX H100 BasePOD provides a quick and cost-effective way for enterprises to integrate AI into their business. Its design flexibility, support services, and management options cater to the growing market demand for reliable AI infrastructure, offering rapid deployment and operational readiness. These qualities make the NVIDIA DGX H100 BasePOD a frontrunner in promoting rapid enterprise AI adoption, ensuring organizations can effectively utilize advanced AI capabilities.